[September 2, 2017: A response to this post has been posted by the authors of Patro et al. 2017, and I have replied to them with a rebuttal]

Spot the difference

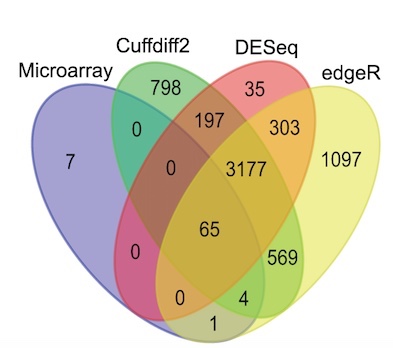

One of the maxims of computational biology is that “no two programs ever give the same result.” This is perhaps not so surprising; after all, most journals seek papers that report a significant improvement to an existing method. As a result, when developing new methods, computational biologists ensure that the results of their tools are different, specifically better (by some metric), than those of previous methods. The maxim certainly holds for RNA-Seq tools. For example, the large symmetric differences displayed in the Venn diagram below (from Zhang et al. 2014) are typical for differential expression tool benchmarks:

In a comparison of RNA-Seq quantification methods, Hayer et al. 2015 showed that methods differ even at the level of summary statistics (in Figure 7 from the paper, shown below, Pearson correlation was calculated using ground truth from a simulation):

These sorts of results are the norm in computational genomics. Finding a pair of software programs that produce identical results is about as likely as finding someone who has won the lottery… twice… in one week. Well, it turns out there has been such a person, and here I describe the computational genomics analog of that unlikely event. Below are a pair of plots made using two different RNA-Seq quantification programs:

The two volcano plots show the log-fold change in abundance estimated for samples sequenced by Boj et al. 2015, plotted against p-values obtained with the program limma-voom. I repeat: the plots were made with quantifications from two different RNA-Seq programs. Details are described in the next section, but before reading it first try playing spot the difference.

The reveal

The top plot is reproduced from Supplementary Figure 6 in Beaulieu-Jones and Greene, 2017. The quantification program used in that paper was kallisto, an RNA-Seq quantification program based on pseudoalignment that was published in

- Near-optimal probabilistic RNA-Seq quantification by Nicolas Bray, Harold Pimentel, Páll Melsted and Lior Pachter, Nature Biotechnology 34 (2016), 525–527.

The bottom plot was made using the quantification program Salmon, and is reproduced from a GitHub repository belonging to the lead author of

- Salmon provides fast and bias-aware quantification of transcript expression by Rob Patro, Geet Duggal, Michael I. Love, Rafael A. Irizarry and Carl Kingsford, Nature Methods 14 (2017), 417–419.

Patro et al. 2017 claim that “[Salmon] achieves the same order-of-magnitude benefits in speed as kallisto and Sailfish but with greater accuracy”. However, after being unable to spot any differences myself in the volcano plots shown above, I decided, with mixed feelings of amusement and annoyance, to check for myself whether the similarity between the programs was some sort of fluke. Or maybe I’d overlooked something obvious, e.g. the fact that programs may tend to give more similar results at the gene level than at the transcript level. Thus began this blog post.

In the figure below, made by quantifying RNA-Seq sample ERR188140 with the latest versions of the two programs, each point is a transcript and its coordinates are the estimated counts produced by kallisto and salmon respectively.

Strikingly, the Pearson correlation coefficient is 0.9996026. However, astute readers will recognize a possible sleight of hand on my part. The correlation may be inflated by similar results for the very abundant transcripts, and the plot hides thousands of points in the lower left-hand corner. RNA-Seq analyses are notorious for such plots that appear sound but can be misleading. However, in this case I’m not hiding anything. The Pearson correlation computed on log-transformed counts is still extremely high (0.9955965) and the Spearman correlation, which gives equal weight to transcripts irrespective of the magnitude of their counts, is 0.991206. My observation is confirmed in Table 3 of Sarkar et al. 2017 (note that in this table “quasi-mapping” corresponds to Salmon):

For context, the Spearman correlation between kallisto and a truly different RNA-Seq quantification program, RSEM, is 0.8944941. At this point I have to say… I’ve been doing computational biology for more than 20 years and I have never seen a situation where two ostensibly different programs output such similar results.
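The three kinds of correlation quoted above can be computed as in the following minimal sketch. The counts are made-up numbers for six hypothetical transcripts, not values from ERR188140, and the log(1 + count) transform is an assumption about how the log-scale comparison might be done.

```python
import math

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)

def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))

# Hypothetical estimated counts from two programs (illustrative only).
counts_a = [0.0, 5.0, 1.0, 150.0, 2400.0, 98000.0]
counts_b = [4.0, 0.0, 6.0, 140.0, 2500.0, 97000.0]

raw = pearson(counts_a, counts_b)
logged = pearson([math.log1p(c) for c in counts_a],
                 [math.log1p(c) for c in counts_b])
rank = spearman(counts_a, counts_b)
print(raw, logged, rank)
```

In this toy data the two programs disagree only on the low-count transcripts, so the raw Pearson correlation stays close to 1 while the log-scale and rank-based correlations drop. That is the effect the log and Spearman checks are meant to expose.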

Patro and I are not alone in finding that Salmon ≃ kallisto (if kallisto and Salmon gave identical results I would write that Salmon = kallisto, but in lieu of the missing 0.004 in correlation I use the symbol ≃ to denote the very very strong similarity). Examples in the literature abound, e.g. Supplementary Figure 5 from Majoros et al. 2017 (shown later in the post), Figure 1 from Everaert et al. 2017

or Figure 3A from Jin et al. 2017:

Just a few weeks ago, Sahraeian et al. 2017 published a comprehensive analysis of 39 RNA-Seq analysis tools and performed hierarchical clusterings of methods according to the similarity of their output. Here is one example (their Supplementary Figure 24a):

Amazingly, kallisto and Salmon-Quasi (the latest version of Salmon) are the two closest programs to each other in the entire comparison, producing output even more similar than the same program, e.g. Cufflinks or StringTie run with different alignments!

This raises the question of how, with kallisto published in May 2016 and Salmon ≃ kallisto, Patro et al. 2017 was published in one of the most respected scientific publications, one that advertises first and foremost that it “is a forum for the publication of novel methods and significant improvements to tried-and-tested basic research techniques in the life sciences”?

How not to perform a differential expression analysis

The Patro et al. 2017 paper presents a number of comparisons between kallisto and Salmon in which Salmon appears to dramatically improve on the performance of kallisto. For example Figure 1c from Patro et al. 2017 is a table showing an enormous performance difference between kallisto and Salmon:

Figure 1c from Patro et al. 2017.

At a false discovery rate of 0.01, the authors claim that in a simulation study where ground truth is known Salmon identifies 4.5 times more truly differential transcripts than kallisto!

This can explain how Salmon was published, namely the reviewers and editor believed Patro et al.’s claims that Salmon significantly improves on previous work. In one analysis Patro et al. provide a p-value to help the “significance” stick. They write that “we found that Salmon’s distribution of mean absolute relative differences was significantly smaller (Mann-Whitney U test, P=0.00017) than those of kallisto.” But how can the result Salmon >> kallisto be reconciled with the fact that everybody repeatedly finds that Salmon ≃ kallisto?

A closer look reveals three things:

- In a differential expression analysis billed as “a typical downstream analysis” Patro et al. did not examine differential expression results for a typical biological experiment with a handful of replicates. Instead they examined a simulation of two conditions with eight replicates in each.

- The large number of replicates allowed them to apply the log-ratio t-test directly to abundance estimates based on transcript per million (TPM) units, rather than estimated counts which are required for methods such as their own DESeq2.

- The simulation involved generation of GC bias in an approach compatible with the inference model, with one batch of eight samples exhibiting “weak GC content dependence” while the other batch of eight exhibiting “more severe fragment-level GC bias.” Salmon was run in a GC bias correction mode.

These were unusual choices by Patro et al. They allowed the authors to showcase the performance of their method in a way that leveraged the match between one of their inference models and the procedure for simulating the reads. The showcasing was enabled by a confounding variable (bias) that exactly matches their condition variable, by the use of TPM units to magnify the impact of that effect on their inference, and by simulation with a large number of replicates, which made the use of TPM possible because with many replicates one can directly apply the log-ratio t-test. This complex chain of dependencies is unraveled below:

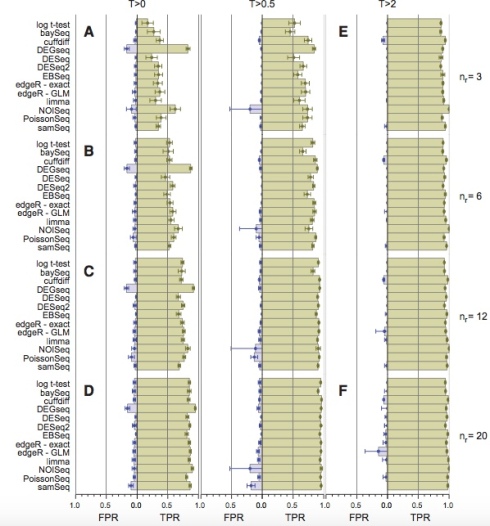

There is a reason why log-fold changes are not directly tested in standard RNA-Seq differential expression analyses. Variance estimation is challenging with few replicates and RNA-Seq methods developers understood this early on. That is why all competitive methods for differential expression analysis such as DESeq/DESeq2, edgeR, limma-voom, Cuffdiff, BitSeq, sleuth, etc. regularize variance estimates (i.e., perform shrinkage) by sharing information across transcripts/genes of similar abundance. In a careful benchmarking of differential expression tools, Schurch et al. 2016 show that the log-ratio t-test is the worst method. See, e.g., their Figure 2:

Figure 2 from Schurch et al. 2016. The four vertical panels show FPR and TPR for programs using 3,6,12 and 20 biological replicates (in yeast). Details are in the Schurch et al. 2016 paper.

The log-ratio t-test performs poorly, and not only when the number of replicates is small and regularization of variance estimates is essential: Schurch et al. specifically recommend DESeq2 (or edgeR) when up to 12 replicates are performed. In fact, the log-ratio t-test was so bad that it didn’t even make it into their Table 2 “summary of recommendations”.
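To make the variance problem concrete, here is a minimal sketch that takes "log-ratio t-test" to mean a two-sample t statistic on log-transformed abundances. The equal-variance (Student) form, the pseudocount of 1 and all the numbers are illustrative assumptions, not the procedure of Patro et al. or Schurch et al. The p-value is omitted because it needs the t-distribution CDF, which is not in the Python standard library.

```python
import math
from statistics import mean, variance

def log_t_statistic(group_a, group_b, pseudocount=1.0):
    """Student two-sample t statistic on log2(value + pseudocount)."""
    la = [math.log2(v + pseudocount) for v in group_a]
    lb = [math.log2(v + pseudocount) for v in group_b]
    na, nb = len(la), len(lb)
    # Pooled per-transcript variance estimated from these replicates alone.
    pooled = ((na - 1) * variance(la) + (nb - 1) * variance(lb)) / (na + nb - 2)
    se = math.sqrt(pooled * (1 / na + 1 / nb))
    return (mean(la) - mean(lb)) / se

# Two hypothetical transcripts with a similar (roughly two-fold) change,
# three replicates per condition.
tight = log_t_statistic([10.0, 10.5, 9.8], [20.0, 21.0, 19.5])
noisy = log_t_statistic([5.0, 10.0, 18.0], [11.0, 20.0, 35.0])
print(tight, noisy)
```

With three replicates the statistic is driven almost entirely by the per-transcript variance estimate, which is itself very noisy. That is the motivation for sharing information across transcripts instead of testing each one in isolation.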

The authors of Patro et al. 2017 are certainly well-aware of the poor performance of the log-ratio t-test. After all, one of them was specifically thanked in the Schurch et al. 2016 paper “for his assistance in identifying and correcting a bug”. Moreover, the program recommended by Schurch et al. (DESeq2) was authored by one of the coauthors on the Patro et al. paper, who regularly and publicly advocates for the use of his programs (and not the log-ratio t-test):

This recommendation has been codified in a detailed RNA-Seq tutorial where M. Love et al. write that “This [Salmon + tximport] is our current recommended pipeline for users”.

In Soneson and Delorenzi, 2013, the authors wrote that “there is no general consensus regarding which [RNA-Seq differential expression] method performs best in a given situation” and despite the development of many methods and benchmarks since this influential review, the question of how to perform differential expression analysis continues to be debated. While it’s true that “best practices” are difficult to agree on, one thing I hope everyone can agree on is that in a “typical downstream analysis” with a handful of replicates

do not perform differential expression with a log-ratio t-test.

Turning to Patro et al.’s choice of units, it is important to note that the requirement of shrinkage for RNA-Seq differential analysis is the reason most differential expression tools require abundances measured in counts as input, and do not use length normalized units such as Transcripts Per Million (TPM). In TPM units the abundance $\rho_t$ for a transcript t satisfies

$$\rho_t \propto \frac{c_t}{N \cdot l_t}$$

where $c_t$ are the estimated counts for transcript t, $l_t$ is the (effective) length of t and N the number of total reads. Whereas counts are approximately Poisson distributed (albeit with some over-dispersion), variance estimates of abundances in TPM units depend on the lengths used in normalization and therefore cannot be used directly for regularization of variance estimation. Furthermore, the dependency of TPM on effective lengths means that abundances reported in TPM are very sensitive to the estimates of effective length.
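The sensitivity of TPM to effective length can be seen in a small worked example. This is a sketch using the standard TPM normalization (counts divided by effective length, rescaled to sum to one million, so the constant factor N cancels); the counts and lengths are made-up numbers.

```python
def tpm(counts, eff_lengths):
    """Transcripts per million from estimated counts and effective lengths."""
    rates = [c / l for c, l in zip(counts, eff_lengths)]
    total = sum(rates)
    return [1e6 * r / total for r in rates]

# Identical estimated counts for three hypothetical transcripts...
counts = [100.0, 100.0, 800.0]
# ...but two different notions of effective length for the first transcript.
lengths_a = [200.0, 1000.0, 2000.0]
lengths_b = [150.0, 1000.0, 2000.0]

tpm_a = tpm(counts, lengths_a)
tpm_b = tpm(counts, lengths_b)
print(tpm_a)
print(tpm_b)
```

Both programs in this toy case assign reads identically, yet changing a single effective length shifts the TPM of every transcript, because TPM is a relative unit.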

This is why, when comparing the quantification accuracy of different programs, it is important to compare abundances using estimated counts. This was highlighted in Bray et al. 2016: “Estimated counts were used rather than transcripts per million (TPM) because the latter is based on both the assignment of ambiguous reads and the estimation of effective lengths of transcripts, so a program might be penalized for having a differing notion of effective length despite accurately assigning reads.” Yet Patro et al. perform no comparisons of programs in terms of estimated counts.

A typical analysis

The choices of Patro et al. in designing their benchmarks are demystified when one examines what would have happened had they compared Salmon to kallisto on typical data with standard downstream differential analysis tools such as their own tximport and DESeq2. I took the definition of “typical” from one of the Patro et al. coauthors’ own papers (Soneson et al. 2016): “Currently, one of the most common approaches is to define a set of non-overlapping targets (typically, genes) and use the number of reads overlapping a target as a measure of its abundance, or expression level.”

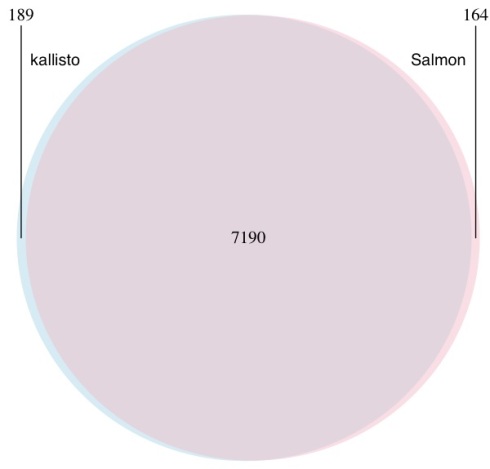

The Venn diagram below shows the differences in transcripts detected as differentially expressed when kallisto and Salmon are compared using the workflow the authors recommend publicly (quantifications -> tximport -> DESeq2) on a typical biological dataset with three replicates in each of two conditions. The number of overlapping genes is shown for a false discovery rate of 0.05 on RNA-Seq data from Trapnell et al. 2014:

A Venn diagram showing the overlap in genes predicted to be differentially expressed by kallisto (blue) and Salmon (pink). Differential expression was performed with DESeq2 using transcript-level counts estimated by kallisto and Salmon and imported to DESeq2 with tximport. Salmon was run with GC bias correction.

This example gives Salmon the benefit of the doubt: the dataset was chosen to be older (when bias was more prevalent) and Salmon was not run in default mode but rather with GC bias correction turned on (option --gcBias).

When I saw these numbers for the first time I gasped. Of course I shouldn’t have been surprised; they are consistent with repeated published experiments in which comparisons of kallisto and Salmon have revealed near identical results. And while I think it’s valuable to publish confirmation of previous work, I did wonder whether Nature Methods would have accepted the Patro et al. paper had the authors conducted an actual “typical downstream analysis”.

What about the TPM?

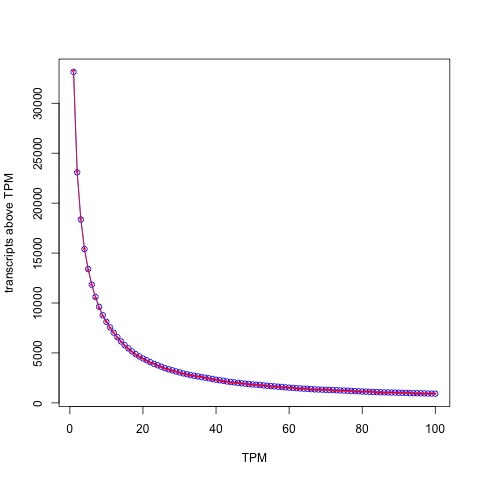

Patro et al. utilized TPM based comparisons for all the results in their paper, presumably to highlight the improvements in accuracy resulting from better effective length estimates. Numerous results in the paper suggest that Salmon is much more accurate than kallisto. However I had seen a figure in Majoros et al. 2017 that examined the (cumulative) distribution of both kallisto and Salmon abundances in TPM units (their Supplementary Figure 5) in which the curves literally overlapped at almost all thresholds:

The plot above was made with Salmon v0.7.2 so in fairness to Patro et al. I remade it using the ERR188140 dataset mentioned above with Salmon v0.8.2:

The distribution of abundances (in TPM units) as estimated by kallisto (blue circles) and Salmon (red stars).

The blue circles correspond to kallisto and the red stars inside to Salmon. With the latest version of Salmon the similarity is even higher than what Majoros et al. observed! The Spearman correlation between kallisto and Salmon with TPM units is 0.9899896.

It’s interesting to examine what this means for a (truly) typical TPM analysis. One way that TPMs are used is to filter transcripts (or genes) by some threshold, typically TPM > 1 (in another deviation from “typical”, a key table in Patro et al. 2017 – Figure 1d – is made by thresholding with TPM > 0.1). The Venn diagram below shows the differences between the programs at the typical TPM > 1 threshold:

A Venn diagram showing the overlap in transcripts predicted by kallisto and Salmon to have estimated abundance > 1 TPM.
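The counts in such a Venn diagram come from simple set operations on the thresholded transcripts. A minimal sketch with made-up TPM values for five hypothetical transcripts:

```python
def expressed(tpm_by_transcript, threshold=1.0):
    """Set of transcripts whose abundance exceeds the TPM threshold."""
    return {t for t, v in tpm_by_transcript.items() if v > threshold}

# Hypothetical TPM estimates from the two programs (illustrative only).
kallisto_tpm = {"tx1": 12.0, "tx2": 0.4, "tx3": 1.2, "tx4": 0.0, "tx5": 3.3}
salmon_tpm = {"tx1": 11.5, "tx2": 0.5, "tx3": 0.9, "tx4": 0.0, "tx5": 3.4}

k = expressed(kallisto_tpm)
s = expressed(salmon_tpm)
venn = {
    "kallisto_only": len(k - s),
    "both": len(k & s),
    "salmon_only": len(s - k),
}
print(venn)
```

Transcripts land in the "only" regions mainly when their abundance sits right at the threshold, as with `tx3` here.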

The figures above were made with Salmon 0.8.2 run in default mode. The correlation between kallisto and Salmon (in TPM units) decreases a tiny amount, from 0.9989224 to 0.9974325, with the --gcBias option, and even the Spearman correlation decreases by only 0.011, from 0.9899896 to 0.9786092.

I think it’s perfectly fine for authors to present their work in the best light possible. What is not ok is to deliberately hide important and relevant truth, which in this case is that Salmon ≃ kallisto.

A note on speed

One of the claims in Patro et al. 2017 is that “[the speed of Salmon] roughly matches the speed of the recently introduced kallisto.” The Salmon claim is based on a benchmark of an experiment (details unknown) with 600 million 75bp paired-end reads using 30 threads. Below are the results of a similar benchmark of Salmon showing time to process 19 samples from Boj et al. 2015 with variable numbers of threads:

First, Salmon with --gcBias is considerably slower than default Salmon. Furthermore, there is a rapid decrease in performance gain with increasing number of threads, something that should come as no surprise. It is well known that quantification can be I/O bound, which means that at some point extra threads don’t provide any gain, as the disk starts grinding and limits access from the CPUs. So why did Patro et al. choose to benchmark runtime with 30 threads?

The figure below provides a possible answer:

In other words, not only is Salmon ≃ kallisto in accuracy, but contrary to the claims in Patro et al. 2017, kallisto is faster. This result is confirmed in Table 1 of Sarkar et al. 2017, who find that Salmon is slower by roughly the same factor as seen above (in the table “quasi-mapping” is Salmon).

Having said that, the speed differences between kallisto and Salmon should not matter much in practice and large scale projects made possible with kallisto (e.g. Vivian et al. 2017) are possible with Salmon as well. Why then did the authors not report their running time benchmarks honestly?

The first common notion

The Patro et al. 2017 paper uses the term “quasi-mapping” to describe an algorithm, published in Srivastava et al. 2016, for obtaining their (what turned out to be near identical to kallisto) results. I have written previously how “quasi-mapping” is the same as pseudoalignment as an alignment concept, even though Srivastava et al. 2016 initially implemented pseudoalignment differently than the way we described it originally in our preprint in Bray et al. 2015. However the reviewers of Patro et al. 2017 may be forgiven for assuming that “quasi-mapping” is a technical advance over pseudoalignment. The Srivastava et al. paper is dense and filled with complex technical detail. Even for an expert in alignment/RNA-Seq it is not easy to see from a superficial reading of the paper that “quasi-mapping” is an equivalent concept to kallisto’s pseudoalignment (albeit implemented with suffix arrays instead of a de Bruijn graph). Nevertheless, the key to the paper is a simple sentence: “Specifically, the algorithm [RapMap, which is now used in Salmon] reports the intersection of transcripts appearing in all hits” in the section 2.1 of the paper. That’s the essence of pseudoalignment right there. The paper acknowledges as much, “This lightweight consensus mechanism is inspired by Kallisto ( Bray et al. , 2016 ), though certain differences exist”. Well, as shown above, those differences appear to have made no difference in standard practice, except insofar as the Salmon implementation of pseudoalignment being slower than the one in Bray et al. 2016.

Srivastava et al. 2016 and Patro et al. 2017 make a fuss about the fact that their “quasi-mappings” take into account the starting positions of reads in transcripts, thereby including more information than a “pure” pseudoalignment. This is a pedantic distinction Patro et al. are trying to create. Already in the kallisto preprint (May 11, 2015), it was made clear that this information was trivially accessible via a reasonable approach to pseudoalignment: “Once the graph and contigs have been constructed, kallisto stores a hash table mapping each k-mer to the contig it is contained in, along with the position within the contig.”

In other words, Salmon is not producing near identical results to kallisto due to an unprecedented cosmic coincidence. The underlying method is the same. I leave it to the reader to apply Euclid’s first common notion:

Things which equal the same thing are also equal to each other.

Convergence

While Salmon is now producing almost identical output to kallisto and is based on the same principles and methods, this was not the case when the program was first released. The history of the Salmon program is accessible via the GitHub repository, which recorded changes to the code, and also via the bioRxiv preprint server where the authors published three versions of the Salmon preprint prior to its publication in Nature Methods.

The first preprint was published on bioRxiv on June 27, 2015. It followed shortly on the heels of the kallisto preprint, which was published on May 11, 2015. However the first Salmon preprint described a program very different from kallisto. Instead of pseudoalignment, Salmon relied on chaining SMEMs (super-maximal exact matches) between reads and transcripts to identify what the authors called “approximately consistent co-linear chains” as proxies for alignments of reads to transcripts. The authors then compared Salmon to kallisto, writing that “We also compare with the recently released method of Kallisto which employs an idea similar in some respects to (but significantly different than) our lightweight-alignment algorithm and again find that Salmon tends to produce more accurate estimates in general, and in particular is better able [to] estimate abundances for multi-isoform genes.” In other words, in 2015 Patro et al. claimed that Salmon was “better” than kallisto. If so, why did the authors of Salmon later change the underlying method of their program to pseudoalignment from SMEM alignment?

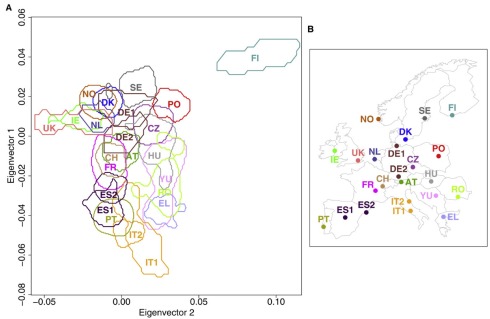

Inspired by temporal ordering analysis of expression data and single-cell pseudotime analysis, I ran all the versions of kallisto and Salmon on ERR188140, and performed PCA on the resulting transcript abundance table to be able to visualize the progression of the programs over time. The figure below shows all the points with the exception of three: Sailfish 0.6.3, kallisto 0.42.0 and Salmon 0.32.0. I removed Sailfish 0.6.3 because it is such an outlier that it caused all the remaining points to cluster together on one side of the plot (the figure is below in the next section). In fairness I also removed one Salmon point (version 0.32.0) because it differed substantially from version 0.4.0 that was released a few weeks after 0.32.0 and fixed some bugs. Similarly, I removed kallisto 0.42.0, the first release of kallisto which had some bugs that were fixed 6 days later in version 0.42.1.

Evidently kallisto output has changed little since May 12, 2015. Although some small bugs were fixed and features added, the quantifications have been very similar. The quantifications have been stable because the algorithm has been the same.

On the other hand the Salmon trajectory shows a steady convergence towards kallisto. The result everyone is finding, namely that currently Salmon ≃ kallisto, is revealed by the clustering of recent versions of Salmon near kallisto. However the first releases of Salmon are very different from kallisto. This is also clear from the heatmap/hierarchical clustering of Sahraeian et al. in which Salmon-SMEM was included (Salmon used SMEMs until version 0.5.1, sometimes labeled fmd, until “quasi-mapping” became the default). A question: if Salmon ca. 2015 was truly better than kallisto then is Salmon ca. 2017 worse than Salmon ca. 2015?

Convergence of Salmon and Sailfish to kallisto over the course of a year. The x-axis labels the time different versions of each program were released. The y-axis is PC1 from a PCA of transcript abundances of the programs.
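For readers who want to reproduce this kind of plot, the following is a minimal sketch of how a PC1 coordinate per program version can be obtained from a table of transcript abundances. It uses power iteration on the Gram matrix of the column-centered table, in pure Python. The four "versions" and their abundances are made up, and the sketch only centers the data, which may differ from the preprocessing used for the figure.

```python
import math

def pc1_scores(rows, iterations=200):
    """PC1 score of each row (program version) of an abundance table."""
    n, m = len(rows), len(rows[0])
    col_means = [sum(r[j] for r in rows) / n for j in range(m)]
    centered = [[r[j] - col_means[j] for j in range(m)] for r in rows]
    # Gram matrix of centered rows; its top eigenvector u and eigenvalue
    # lam give the PC1 scores as u * sqrt(lam).
    gram = [[sum(a * b for a, b in zip(ri, rj)) for rj in centered]
            for ri in centered]
    u = [float(i + 1) for i in range(n)]  # arbitrary non-zero start vector
    for _ in range(iterations):
        w = [sum(g * x for g, x in zip(row, u)) for row in gram]
        norm = math.sqrt(sum(x * x for x in w))
        u = [x / norm for x in w]
    lam = sum(ui * sum(g * x for g, x in zip(row, u))
              for ui, row in zip(u, gram))
    return [ui * math.sqrt(lam) for ui in u]

# Hypothetical abundances of three transcripts for three successive versions
# of one program, followed by a stable reference program.
table = [
    [90.0, 5.0, 5.0],    # early version
    [70.0, 18.0, 12.0],  # intermediate version
    [52.0, 29.0, 19.0],  # recent version
    [50.0, 30.0, 20.0],  # reference program
]
scores = pc1_scores(table)
print(scores)
```

The sign of a principal component is arbitrary, so only the relative positions matter: in this toy table the successive versions move monotonically along PC1 towards the reference program.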

Prestamping

The bioRxiv preprint server provides a feature by which a preprint can be linked to its final form in a journal. This feature is useful to readers of the bioRxiv, as final published papers are generally improved after preprint reader, reviewer, and editor comments have been addressed. Journal linking is also a mechanism for authors to time stamp their published work using the bioRxiv. However I’m sure the bioRxiv founders did not intend the linking feature to be abused as a “prestamping” mechanism, i.e. a way for authors to ex post facto receive a priority date for a published paper that had very little, or nothing, in common with the original preprint.

A comparison of the June 2015 preprint mentioning the Salmon program and the current Patro et al. paper reveals almost nothing in common. The initial method changed drastically in tandem with an update to the preprint on October 3, 2015 at which point the Salmon program was using “quasi mapping”, later published in Srivastava et al. 2016. Last year I met with Carl Kingsford (co-corresponding author of Patro et al. 2017) to discuss my concern that Salmon was changing from a method distinct from that of kallisto (SMEMs of May 2015) to one that was replicating all the innovations in kallisto, without properly disclosing that it was essentially a clone. Yet despite a promise that he would raise my concerns with the Salmon team, I never received a response.

At this point, the Salmon core algorithms have changed completely, the software program has changed completely, and the benchmarking has changed completely. The Salmon project of 2015 and the Salmon project of 2017 are two very different projects although the name of the program is the same. While some features have remained, for example the Salmon mode that processes transcriptome alignments (similar to eXpress) was present in 2015, and the approach to likelihood maximization has persisted, considering the programs the same is to descend into Theseus’ paradox.

Interestingly, Patro specifically asked to have the Salmon preprint linked to the journal:

The linking of preprints to journal articles is a feature that arXiv does not automate, and perhaps wisely so. If bioRxiv is to continue to automatically link preprints to journals it needs to focus not only on eliminating false negatives but also false positives, so that journal linking cannot be abused by authors seeking to use the preprint server to prestamp their work after the fact.

The fish always win?

The Sailfish program was the precursor of Salmon, and was published in Patro et al. 2014. At the time, a few students and postdocs in my group read the paper and then discussed it in our weekly journal club. It advocated a philosophy of “lightweight algorithms, which make frugal use of data, respect constant factors and effectively use concurrent hardware by working with small units of data where possible”. Indeed, two themes emerged in the journal club discussion:

1. Sailfish was much faster than other methods by virtue of being simpler.

2. The simplicity was to replace approximate alignment of reads with exact alignment of k-mers. When reads are shredded into their constituent k-mer “mini-reads”, the difficult read -> reference alignment problem in the presence of errors becomes an exact matching problem efficiently solvable with a hash table.

Despite the claim in the Sailfish abstract that “Sailfish provides quantification time…without loss of accuracy” and Figure 1 from the paper showing Sailfish to be more accurate than RSEM, we felt that the shredding of reads must lead to reduced accuracy, and we quickly checked and found that to be the case (this was later noted by others, e.g. Hensman et al. 2015, Lee et al. 2015).

After reflecting on the Sailfish paper and results, Nicolas Bray had the key idea of abandoning alignments as a requirement for RNA-Seq quantification, developed pseudoalignment, and later created kallisto (with Harold Pimentel and Páll Melsted).

I mention this because after the publication of kallisto, Sailfish started changing along with Salmon, and is now frequently discussed in the context of kallisto and Salmon as an equal. Indeed, the PCA plot above shows that (in its current form, v0.10.0) Sailfish is also nearly identical to kallisto. This is because with the release of Sailfish 0.7.0 in September 2015, Patro et al. started changing the Sailfish approach to use pseudoalignment in parallel with the conversion of Salmon to use pseudoalignment. To clarify the changes in Sailfish, I made the PCA plot below which shows where the original version of Sailfish that coincided with the publication of Patro et al. 2014 (version 0.6.3 March 2014) lies relative to the more recent versions and to Salmon:

In other words, despite a series of confusing statements on the Sailfish GitHub page and an out-of-date description of the program on its homepage, Sailfish in its published form was substantially less accurate and slower than kallisto, and in its current form Sailfish is kallisto.

In retrospect, the results in Figure 1 of Patro et al. 2014 seem to be as problematic as the results in Figure 1 of Patro et al. 2017. Apparently crafting computational experiments via biased simulations and benchmarks to paint a distorted picture of performance is a habit of Patro et al.

Addendum [August 5, 2017]

In the post I wrote that “The history of the Salmon program is accessible via the GitHub repository, which recorded changes to the code, and also via the bioRxiv preprint server where the authors published three versions of the Salmon preprint prior to its publication in Nature Methods.” Here are the details of how these support the claims I make (tl;dr https://twitter.com/yarbsalocin/status/893886707564662784):

Sailfish (current version) and Salmon implemented kallisto’s pseudoalignment algorithm using suffix arrays

First, both Sailfish and Salmon use RapMap (via `SACollector`) and call `mergeLeftRightHits()`:

Sailfish:

https://github.com/kingsfordgroup/sailfish/blob/352f9001a442549370eb39924b06fa3140666a9e/src/SailfishQuantify.cpp#L192

Salmon:

https://github.com/COMBINE-lab/salmon/commit/234cb13d67a9a1b995c86c8669d4cefc919fbc87#diff-594b6c23e3bdd02a14cc1b861c812b10R2205

The RapMap code for “quasi mapping” executes an algorithm identical to pseudoalignment, down to the detail of what happens to the k-mers in a single read:

First, `hitCollector()` calls `getSAHits_()`:

https://github.com/COMBINE-lab/RapMap/blob/bd76ec5c37bc178fd93c4d28b3dd029885dbe598/include/SACollector.hpp#L249

Here k-mers are hashed to SA intervals (suffix array intervals), which are then extended to see how far ahead to jump. This is one of the two key ideas in the kallisto paper, namely that not all the k-mers in a read need to be examined to pseudoalign the read. It’s much more than that though: it’s the exact same algorithm, down to exactly which k-mers are examined. kallisto performs this “skipping” using contig jumping with a different data structure (the transcriptome de Bruijn graph), but aside from the data structure used, what happens is identical:

https://github.com/COMBINE-lab/RapMap/blob/c1e3132a2e136615edbb91348781cb71ba4c22bd/include/SACollector.hpp#L652

makes a call to jumping and the code to compute MMP (skipping) is

https://github.com/COMBINE-lab/RapMap/blob/c1e3132a2e136615edbb91348781cb71ba4c22bd/include/SASearcher.hpp#L77

There is a different detail in the Sailfish/Salmon code which is that when skipping forward the suffix array is checked for exact matching on the skipped sequence. kallisto does not have this requirement (although it could). On error-free data these will obviously be identical; on error prone data this may make Salmon/Sailfish a bit more conservative and kallisto a bit more robust to error. Also due to the structure of suffix arrays there is a possible difference in behavior when a transcript contains a repeated k-mer. These differences affect a tiny proportion of reads, as is evident from the result that kallisto and Salmon produce near identical results.

The second key idea in kallisto is that of intersecting equivalence classes for a read. This exact procedure is in:

https://github.com/COMBINE-lab/RapMap/blob/bd76ec5c37bc178fd93c4d28b3dd029885dbe598/include/SACollector.hpp#L363

which calls:

https://github.com/COMBINE-lab/RapMap/blob/bd76ec5c37bc178fd93c4d28b3dd029885dbe598/src/HitManager.cpp#L599
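To make the idea of intersecting equivalence classes concrete, here is a minimal sketch. It is not code from kallisto or RapMap: the names `EquivClass` and `intersectClasses` are hypothetical, and it assumes each examined k-mer has already been mapped to a sorted list of transcript ids.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <vector>

// Hypothetical representation: the equivalence class of a k-mer is the
// sorted list of ids of the transcripts that contain it.
using EquivClass = std::vector<int>;

// Intersect the equivalence classes of the k-mers examined in one read.
// The result is the set of transcripts compatible with every examined k-mer;
// an empty result means the read does not pseudoalign.
EquivClass intersectClasses(const std::vector<EquivClass>& kmerClasses) {
    if (kmerClasses.empty()) return {};
    EquivClass result = kmerClasses.front();
    assert(std::is_sorted(result.begin(), result.end()));
    for (std::size_t i = 1; i < kmerClasses.size() && !result.empty(); ++i) {
        const EquivClass& next = kmerClasses[i];
        assert(std::is_sorted(next.begin(), next.end()));
        EquivClass tmp;
        std::set_intersection(result.begin(), result.end(),
                              next.begin(), next.end(),
                              std::back_inserter(tmp));
        result.swap(tmp);
    }
    return result;
}
```

For a read whose examined k-mers have classes {0, 1, 2}, {1, 2, 5} and {2, 5}, the function returns {2}: only transcript 2 is compatible with all of them.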

There was a choice we had to make in kallisto of how to handle information from paired-end reads (does one require consistent pseudoalignment in both, or does just one suffice to pseudoalign a read?).

The code for intersection between left and right reads, which makes the same choices as kallisto, is:

https://github.com/COMBINE-lab/RapMap/blob/bd76ec5c37bc178fd93c4d28b3dd029885dbe598/include/RapMapUtils.hpp#L810

In other words, stepping through what happens to the k-mers in a read shows that Sailfish/Salmon copied the algorithms of kallisto and implemented them, the only difference being the data structure used to hash the k-mers. This is why, when I did my run of Salmon vs. kallisto that led to this blog post, I found that

kallisto pseudoaligned 69,780,930 reads

vs

salmon 69,701,169.

That’s a difference of 79,761 reads out of ~70 million, or about 0.1%.
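Written out with the two read counts above (using the kallisto count as the denominator, which is my choice here), the arithmetic is:

```latex
\[
\frac{69{,}780{,}930 - 69{,}701{,}169}{69{,}780{,}930}
  = \frac{79{,}761}{69{,}780{,}930}
  \approx 0.00114 \approx 0.11\%
\]
```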

Two additional points:

- Until the kallisto program and preprint were published, Salmon used SMEMs. Only after kallisto did Salmon change to using k-mer cached suffix array intervals.

- The kallisto preprint did not discuss outputting position as part of pseudoalignment because it was not central to the idea. It’s trivial to report pseudoalignment positions with either data structure and in fact both kallisto and Salmon do.

I want to make very clear here that I think there can be great value in implementing an algorithm with a different data structure. It’s a form of reproducibility that one can learn from: how to optimize, where performance gains can be made, etc. Unfortunately most funding agencies don’t give grants for projects whose goal is solely to reproduce someone else’s work. Neither do most journals publish papers that set out to do that. That’s too bad. If Patro et al. had presented their work honestly, and explained that they were implementing pseudoalignment with a different data structure to see if it’s better, I’d be a champion of their work. That’s not how they presented their work.

Salmon copied details in the quantification

The idea of using the EM algorithm for quantification with RNA-Seq goes back to Jiang and Wong, 2009, arguably even to Xing et al. 2006. I wrote up the details of the history in a review in 2011 that is on the arXiv. kallisto runs the EM algorithm on equivalence classes, an idea that originates with Nicolae et al. 2011 (or perhaps even Jiang and Wong 2009) but whose significance we understood from the Sailfish paper (Patro et al. 2014). Therefore the fact that Salmon (now) and kallisto both use the EM algorithm, in the same way, makes sense.

However Salmon did not use the EM algorithm before the kallisto preprint and program were published. It used an online variational Bayes algorithm instead. In the May 18, 2015 release of Salmon there is no mention of EM. Then, with the version 0.4 release date Salmon suddenly switches to the EM. In implementing the EM algorithm there are details that must be addressed, for example setting thresholds for when to terminate rounds of inference based on changes in the (log) likelihood (i.e. determine convergence).

For example, kallisto sets parameters

const double alpha_limit = 1e-7;

const double alpha_change_limit = 1e-2;

const double alpha_change = 1e-2;

in EMAlgorithm.h

https://github.com/pachterlab/kallisto/blob/90db56ee8e37a703c368e22d08b692275126900e/src/EMAlgorithm.h

The link above shows that these kallisto parameters were set and have not changed since the release of kallisto.

Also they were not always this way, see e.g. the version of April 6, 2015:

https://github.com/pachterlab/kallisto/blob/2651317188330f7199db7989b6a4dc472f5d1669/src/EMAlgorithm.h

This is because one of the things we did was explore the effects of these thresholds and understand how setting them affects performance. This can also be seen in a legacy redundancy: we have both alpha_change and alpha_change_limit, one of which ended up being unnecessary because they are equal in the program and used on one line.

The first versions of Salmon post-kallisto switched to the EM, but didn’t even terminate it the same way as kallisto, adopting instead a maximum iteration of 1,000. See

https://github.com/COMBINE-lab/salmon/blob/59bb9b2e45c76137abce15222509e74424629662/include/CollapsedEMOptimizer.hpp

from May 30, 2015.

This changed later, first with the introduction of minAlpha (= kallisto’s alpha_limit)

https://github.com/COMBINE-lab/salmon/blob/56120af782a126c673e68c8880926f1e59cf1427/src/CollapsedEMOptimizer.cpp

and then alphaCheckCutoff (kallisto’s alpha_change_limit)

https://github.com/COMBINE-lab/salmon/blob/a3bfcf72e85ebf8b10053767b8b506280a814d9e/src/CollapsedEMOptimizer.cpp

Here are the salmon thresholds:

double minAlpha = 1e-8;

double alphaCheckCutoff = 1e-2;

double cutoff = minAlpha;

Notice that they are identical except that minAlpha = 1e-8 and not kallisto’s alpha_limit = 1e-7. However in kallisto, from the outset, the way that alpha_limit has been used is:

if (alpha_[ec] < alpha_limit/10.0) {

alpha_[ec] = 0.0;

}

In other words, alpha_limit in kallisto is really 1e-8, and has been all along.
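To illustrate how thresholds like these can enter an EM loop over equivalence classes, here is a toy sketch. It is a simplification written for this explanation, not the kallisto or Salmon implementation: the struct `EquivClassCount`, the function `runEM`, the uniform initialization and the assumption of equal effective lengths are all mine. Only the three constants and the `alpha_limit/10.0` zeroing step are taken from the snippets quoted above.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical type: the transcripts a group of reads is compatible with,
// and the number of reads that fell into that equivalence class.
struct EquivClassCount {
    std::vector<int> transcripts;
    double count;
};

// Toy EM over equivalence classes, assuming equal effective lengths.
std::vector<double> runEM(const std::vector<EquivClassCount>& classes,
                          std::size_t numTranscripts,
                          int maxRounds = 10000) {
    const double alpha_limit = 1e-7;         // values quoted in the post
    const double alpha_change_limit = 1e-2;
    const double alpha_change = 1e-2;

    assert(numTranscripts > 0);
    double totalReads = 0.0;
    for (const auto& ec : classes) totalReads += ec.count;

    // Assumption: start from a uniform assignment of reads to transcripts.
    std::vector<double> alpha(numTranscripts, totalReads / numTranscripts);
    std::vector<double> next(numTranscripts, 0.0);

    for (int round = 0; round < maxRounds; ++round) {
        // Split each class count among its transcripts in proportion to alpha.
        for (const auto& ec : classes) {
            double denom = 0.0;
            for (int t : ec.transcripts) denom += alpha[t];
            if (denom <= 0.0) continue;
            for (int t : ec.transcripts) next[t] += ec.count * alpha[t] / denom;
        }
        // Count transcripts that are non-negligible and still changing.
        int changed = 0;
        for (std::size_t t = 0; t < numTranscripts; ++t) {
            if (next[t] > alpha_change_limit &&
                std::fabs(next[t] - alpha[t]) / next[t] > alpha_change) {
                ++changed;
            }
            alpha[t] = next[t];
            next[t] = 0.0;
        }
        if (changed == 0) break;  // converged under the relative-change test
    }
    // Zero out abundances below alpha_limit / 10, i.e. below 1e-8.
    for (double& a : alpha) {
        if (a < alpha_limit / 10.0) a = 0.0;
    }
    return alpha;
}
```

With three classes ({0}: 30 reads, {0, 1}: 40 reads, {1}: 10 reads) and three transcripts, the estimates approach 60, 20 and 0 reads respectively, and the loop stops once no transcript changes by more than 1% in a round.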

The copying of all the details of our program has consequences for performance. In the sample I ran, kallisto performed 1216 rounds of EM vs. 1214 rounds in Salmon.

Sailfish (current version) copied our sequence specific bias method

One of the things we did in kallisto is implement a sequence specific bias correction along the lines of what was done previously in Roberts et al. 2011, and later in Roberts et al. 2013. Implementing sequence specific bias correction in kallisto required working things out from scratch because of the way equivalence classes, and not reads, were being used with the EM algorithm. I worked this out together with Páll Melsted during conversations that lasted about a month in the Spring of 2015. We implemented it in the code, although we did not release details of how it worked with the initial preprint because it was an option and not the default, and we thought we might still want to change it before submitting the journal paper.

Here Rob is stating that Salmon can account for biases that kallisto cannot:

https://www.biostars.org/p/143458/#143639

This was a random forest bias correction method different from kallisto’s.

Shortly thereafter, here is the source code in Sailfish deprecating the Salmon bias correction and switching to kallisto’s method:

https://github.com/kingsfordgroup/sailfish/commit/377f6d65fe5201f7816213097e82df69e4786714#diff-fe8a1774cd7c858907112e6c9fda1e9dR76

This is the update to effective length in kallisto:

https://github.com/pachterlab/kallisto/blob/e5957cf96f029be4e899e5746edcf2f63e390609/src/weights.cpp#L184

Here is the Sailfish code:

https://github.com/kingsfordgroup/sailfish/commit/be0760edce11f95377088baabf72112f920874f9#diff-8341ac749ad4ac5cfcc8bfef0d6f1efaR796

Notice that there has been a literal copying down to the variable names:

https://github.com/kingsfordgroup/sailfish/commit/be0760edce11f95377088baabf72112f920874f9#diff-8341ac749ad4ac5cfcc8bfef0d6f1efaR796

The code written by the student of Rob was:

effLength *=alphaNormFactor/readNormFactor;

The code written by us is

efflen *= 0.5*biasAlphaNorm/biasDataNorm;

The code rewritten by Rob (editing that of the student):

effLength *= 0.5 * (txomeNormFactor / readNormFactor);

Note that since our bias correction method was not reported in our preprint, this had to have been copied directly from our codebase and was done so without any attribution.

I raised this specific issue with Carl Kingsford by email prior to our meeting on April 13, 2016. We then discussed it in person. The conversation and email were prompted by a change to the Sailfish README on April 7, 2016 specifically accusing us of comparing kallisto to a “**very old** version of Sailfish”:

https://github.com/kingsfordgroup/sailfish/commit/550cd19f7de0ea526f512a5266f77bfe07148266

What was stated is “The benchmarks in the kallisto paper *are* made against a very old version of Sailfish” not “were made against”. By the time that was written, it might well have been true. But kallisto was published in May 2015, it benchmarked with the Sailfish program described in Patro et al. 2014, and by 2016 Sailfish had changed completely, implementing the pseudoalignment of kallisto.

Token attribution

Another aspect of an RNA-Seq quantification program is effective length estimation. There is an attribution to kallisto in the Sailfish code now explaining that this is from kallisto:

“Computes (and returns) new effective lengths for the transcripts based on the current abundance estimates (alphas) and the current effective lengths (effLensIn). This approach is based on the one taken in Kallisto”

https://github.com/kingsfordgroup/sailfish/blob/b1657b3e8929584b13ad82aa06060ce1d5b52aed/src/SailfishUtils.cpp

This is from January 23rd, 2016, almost 9 months after kallisto was released, and 3 months before the Sailfish README accused us of not testing the latest version of Sailfish in May 2015.

The attribution for effective lengths is also in the Salmon code, from 6 months later, in June 2016:

https://github.com/COMBINE-lab/salmon/blob/335c34b196205c6aebe4ddcc12c380eb47f5043a/include/DistributionUtils.hpp

There is also an acknowledgement in the Salmon code that a machine floating point tolerance we use

https://github.com/pachterlab/kallisto/blob/master/src/EMAlgorithm.h#L19

was copied.

The acknowledgment in Salmon is here

https://github.com/COMBINE-lab/salmon/blob/a3bfcf72e85ebf8b10053767b8b506280a814d9e/src/CollapsedEMOptimizer.cpp

This is the same file where the kallisto thresholds for the EM were copied to.

So after copying our entire method, our core algorithm, many of our ideas, specific parameters, and numerous features… really just about everything that goes into an RNA-Seq quantification project, there is an acknowledgment that our machine tolerance threshold was “intelligently chosen”.

Recent news of James Watson’s auction of his Nobel Prize medal has unearthed a very unpleasant memory for me.

In March 2004 I attended an invitation-only genomics meeting at the famed Banbury Center at Cold Spring Harbor Laboratory. I had heard legendary stories about Banbury, and have to admit I felt honored and excited when I received the invitation. There were rumors that sometimes James Watson himself would attend meetings. The emails I received explaining the secretive policies of the Center only added to the allure. I felt that I had received an invitation to the genomics equivalent of Skull and Bones.

Although Watson did not end up attending the meeting, my high expectations were met when he did decide to drop in on dinner one evening at Robertson house. Without warning he seated himself at my table. I was in awe. The table was round with seating for six, and Honest Jim sat down right across from me. He spoke incessantly throughout dinner and we listened. Sadly though, most of the time he was spewing racist and misogynistic hate. I remember him asking rhetorically “who would want to adopt an Irish kid?” (followed by a tirade against the Irish that I later saw repeated in the news) and he made a point to disparage Rosalind Franklin referring to her derogatorily as “that woman”. No one at the table (myself included) said a word. I deeply regret that.

One of Watson’s obsessions has been to “improve” the “imperfect human” via human germline engineering. This is disturbing on many many levels. First, there is the fact that for years Watson presided over Cold Spring Harbor Laboratory which actually has a history as a center for eugenics. Then there are the numerous disparaging remarks by Watson about everyone who is not exactly like him, leaving little doubt about who he imagines the “perfect human” to be. But leaving aside creepy feelings… could he be right? Is the “perfect human” an American from Chicago of mixed Scottish/Irish ancestry? Should we look forward to a world filled with Watsons? I have recently undertaken a thought experiment along these lines that I describe below. The result of the experiment is dedicated to James Watson on the occasion of his unbirthday today.

Introduction

SNPedia is an open database of 59,593 SNPs and their associations. A SNP entry includes fields for “magnitude” (a subjective measure of significance on a scale of 0–10) and “repute” (good or bad), and allele classifications for many diseases and medical conditions. For example, the entry for a SNP (rs1799971) that associates with alcohol cravings describes the “normal” and “bad” alleles. In addition to associating with phenotypes, SNPs can also associate with populations. For example, as seen in the Geography of Genetic Variants Browser, rs1799971 allele frequencies vary greatly among Africans, Europeans and Asians. If the genotype of an individual is known at many SNPs, it is therefore possible to guess where they are from: in the case of rs1799971 someone who is A:A is a lot more likely to be African than Japanese, and with many SNPs the probabilities can narrow the location of an individual to a very specific geographic location. This is the principle behind the application of principal component analysis (PCA) to the study of populations. Together, SNPedia and PCA therefore provide a path to determining where a “perfect human” might be from:

- Create a “perfect human” in silico by setting the alleles at all SNPs so that they are “good”.

- Add the “perfect human” to a panel of genotyped individuals from across a variety of populations and perform PCA to reveal the location and population of origin of the individual.
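The two steps above can be sketched on toy data. This is only an illustration under stated assumptions: genotypes are coded as 0/1/2 copies of an allele, the five-individual panel used in the usage note is made up, the helper names (`centerColumns`, `firstPrincipalAxis`, `project`) are hypothetical, and the first principal axis is found by plain power iteration rather than by whatever software was used for the plots in this post.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Rows are individuals, columns are SNPs (toy coding: 0, 1 or 2 allele copies).
using Matrix = std::vector<std::vector<double>>;

// Subtract each column (SNP) mean, so PCA describes variation around the average genotype.
Matrix centerColumns(Matrix x) {
    assert(!x.empty());
    const std::size_t n = x.size(), p = x[0].size();
    for (std::size_t j = 0; j < p; ++j) {
        double mean = 0.0;
        for (std::size_t i = 0; i < n; ++i) mean += x[i][j];
        mean /= static_cast<double>(n);
        for (std::size_t i = 0; i < n; ++i) x[i][j] -= mean;
    }
    return x;
}

// First principal axis of a centered matrix, via power iteration on X^T X.
std::vector<double> firstPrincipalAxis(const Matrix& x, int iterations = 200) {
    assert(!x.empty());
    const std::size_t n = x.size(), p = x[0].size();
    std::vector<double> v(p);
    for (std::size_t j = 0; j < p; ++j) v[j] = 1.0 / static_cast<double>(j + 1);  // arbitrary start
    for (int it = 0; it < iterations; ++it) {
        std::vector<double> w(p, 0.0);
        for (std::size_t i = 0; i < n; ++i) {
            double s = 0.0;
            for (std::size_t j = 0; j < p; ++j) s += x[i][j] * v[j];  // s = (X v)_i
            for (std::size_t j = 0; j < p; ++j) w[j] += x[i][j] * s;  // w = X^T (X v)
        }
        double norm = 0.0;
        for (double c : w) norm += c * c;
        norm = std::sqrt(norm);
        if (norm == 0.0) break;  // no variation to capture
        for (std::size_t j = 0; j < p; ++j) v[j] = w[j] / norm;
    }
    return v;
}

// Coordinate of each individual along the given axis.
std::vector<double> project(const Matrix& x, const std::vector<double>& axis) {
    std::vector<double> scores;
    for (const auto& row : x) {
        double s = 0.0;
        for (std::size_t j = 0; j < axis.size(); ++j) s += row[j] * axis[j];
        scores.push_back(s);
    }
    return scores;
}
```

As a usage example, take a made-up panel with two individuals from one population, (2, 2, 0) and (2, 1, 0), two from another, (0, 0, 2) and (0, 1, 2), and an added individual (1, 1, 1). After centering, the first component puts the two populations on opposite sides of zero and places the added individual between them.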

Results

After restricting the SNP set from SNPedia to those with green painted alleles, i.e. “good”, there are 4967 SNPs with which to construct the “perfect human” (available for download here).

A dataset of genotyped individuals can be obtained from the 1000 Genomes Project, including Africans, (indigenous) Americans, East Asians and Europeans.

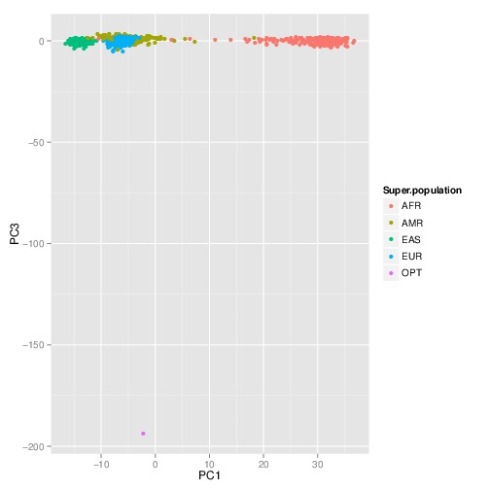

The PCA plot (1st and 2nd components) showing all the individuals together with the “perfect human” (in pink; see arrow) is shown below:

The nearest neighbor to the “perfect human” is HG00737, a female who is… Puerto Rican. One might imagine that such a person already existed, maybe Yuiza, the only female Taino Cacique (chief) in Puerto Rico’s history:

But as the 3rd principal component shows, reifying the “perfect human” is a misleading undertaking:

Here the “perfect human” is revealed to be decidedly non-human. This is not surprising, and it reflects the fact that the alleles of the “perfect human” place it as a significant outlier to the human population. In fact, this is even more evident in the case of the “worst human”, namely the individual that has the “bad” alleles at every SNP. A projection of that individual onto any combination of principal components shows them to be far removed from any actual human. The best visualization appears in the projection onto the 2nd and 3rd principal components, where they appear as a clear outlier (point labeled DYS), and diametrically opposite to Africans:

The fact that the “worst human” does not project well onto any of the principal components whereas the “perfect human” does is not hard to understand from basic population genetics principles. It is an interesting exercise that I leave to the reader.

Conclusion

The fact that the “perfect human” is Puerto Rican makes a lot of sense. Since many disease SNPs are population specific, it makes sense that an individual homozygous for all “good” alleles should be admixed. And that is exactly what Puerto Ricans are. In a “women in the diaspora” study, Puerto Rican women born on the island but living in the United States were shown to be 53.3±2.8% European, 29.1±2.3% West African, and 17.6±2.4% Native American. In other words, to collect all the “good” alleles it is necessary to be admixed, but admixture itself is not sufficient for perfection. On a personal note, I was happy to see population genetic evidence supporting my admiration for the perennial championship Puerto Rico All Stars team:

As for Watson, it seems fitting that he should donate the proceeds of his auction to the Caribbean Genome Center at the University of Puerto Rico.

[Update: Dec. 7/8: Taras Oleksyk from the Department of Biology at the University of Puerto Rico Mayaguez has written an excellent post-publication peer review of this blog post and Rafael Irizarry from the Harvard School of Public Health has written a similar piece, Genéticamente, no hay tal cosa como la raza puertorriqueña in Spanish. Both are essential reading.]

Nature Publishing Group claims on its website that it is committed to publishing “original research” that is “of the highest quality and impact”. But when exactly is research “original”? This is a question with a complicated answer. A recent blog post by senior editor Dorothy Clyde at Nature Protocols provides insight into the difficulties Nature faces in detecting plagiarism, and identifies the issue of self plagiarism as particularly problematic. The journal tries to avoid publishing the work of authors who have previously published the same work or a minor variant thereof. I imagine this is partly in the interests of fairness, a service to the scientific community to ensure that researchers don’t have to sift through numerous variants of a single research project in the literature, and a personal interest of the journal in its aim to publish only the highest level of scholarship.

On the other hand, there is also a rationale for individual researchers to revisit their own previously published work. Sometimes results can be recast in a way that makes them accessible to different communities, and rethinking of ideas frequently leads to a better understanding, and therefore a better exposition. The mathematician Gian-Carlo Rota made the case for enlightened self-plagiarism in one of his ten lessons he wished he had been taught when he was younger:

3. Publish the same result several times

After getting my degree, I worked for a few years in functional analysis. I bought a copy of Frederick Riesz’ Collected Papers as soon as the big thick heavy oversize volume was published. However, as I began to leaf through, I could not help but notice that the pages were extra thick, almost like cardboard. Strangely, each of Riesz’ publications had been reset in exceptionally large type. I was fond of Riesz’ papers, which were invariably beautifully written and gave the reader a feeling of definitiveness.

As I looked through his Collected Papers however, another picture emerged. The editors had gone out of their way to publish every little scrap Riesz had ever published. It was clear that Riesz’ publications were few. What is more surprising is that the papers had been published several times. Riesz would publish the first rough version of an idea in some obscure Hungarian journal. A few years later, he would send a series of notes to the French Academy’s Comptes Rendus in which the same material was further elaborated. A few more years would pass, and he would publish the definitive paper, either in French or in English. Adam Koranyi, who took courses with Frederick Riesz, told me that Riesz would lecture on the same subject year after year, while meditating on the definitive version to be written. No wonder the final version was perfect.

Riesz’ example is worth following. The mathematical community is split into small groups, each one with its own customs, notation and terminology. It may soon be indispensable to present the same result in several versions, each one accessible to a specific group; the price one might have to pay otherwise is to have our work rediscovered by someone who uses a different language and notation, and who will rightly claim it as his own.

The question is: where does one draw the line?

I was recently forced to confront this question when reading an interesting paper about a statistical approach to utilizing controls in large-scale genomics experiments:

J.A. Gagnon-Bartsch and T.P. Speed, Using control genes to correct for unwanted variation in microarray data, Biostatistics, 2012.

A cornerstone in the logic and methodology of biology is the notion of a “control”. For example, when testing the result of a drug on patients, a subset of individuals will be given a placebo. This is done to literally control for effects that might be measured in patients taking the drug, but that are not inherent to the drug itself. By examining patients on the placebo, it is possible to essentially cancel out uninteresting effects that are not specific to the drug. In modern genomics experiments that involve thousands, or even hundreds of thousands of measurements, there is a biological question of how to design suitable controls, and a statistical question of how to exploit large numbers of controls to “normalize” the high-dimensional measurements (i.e. remove unwanted variation from them).

Formally, one framework for thinking about this is a linear model for gene expression. Using the notation of Gagnon-Bartsch & Speed, we have an expression matrix $Y$ of size $m \times n$ (m samples and n genes) modeled as

$Y = X\beta + Z\gamma + W\alpha + \epsilon$.

Here X is a matrix describing various conditions (also called factors) and associated to it is the parameter matrix $\beta$ that records the contribution, or influence, of each factor on each gene. $\beta$ is the primary parameter of interest to be estimated from the data Y. The $\epsilon$ are random noise, and finally Z and W are observed and unobserved covariates respectively. For example Z might encode factors for covariates such as gender, whereas W would encode factors that are hidden, or unobserved. A crucial point is that the number of hidden factors in W, namely k, is not known. The matrices $\gamma$ and $\alpha$ record the contributions of the Z and W factors on gene expression, and must also be estimated. It should be noted that Y may be the logarithm of expression levels from a microarray experiment, or the analogous quantity from an RNA-Seq experiment (e.g. log of abundance in FPKM units).

Linear models have been applied to gene expression analysis for a very long time; I can think of papers going back 15 years. But they became central to all analysis about a decade ago, specifically popularized with the Limma package for microarray data analysis. In an important paper in 2007, Leek and Storey focused explicitly on the identification of hidden factors and estimation of their influence, using a method called SVA (Surrogate Variable Analysis). Mathematically, they described a procedure for estimating k and W and the parameters $\alpha$. I will not delve into the details of SVA in this post, except to say that the overall idea is to first perform linear regression (assuming no hidden factors) to identify the parameters $\beta$ and to then perform singular value decomposition (SVD) on the residuals to identify hidden factors (details omitted here). The resulting identified hidden factors (and associated influence parameters) are then used in a more general model for gene expression in subsequent analysis.

Gagnon-Bartsch and Speed refine this idea by suggesting that it is better to infer W from controls. For example, house-keeping genes that are unlikely to correlate with the conditions being tested can be used to first estimate W, and then subsequently all the parameters of the model can be estimated by linear regression. They term this two-step process RUV-2 (an acronym for Remove Unwanted Variation), where the “2” designates that the procedure is a two-step procedure. As with SVA, the key to inferring W from the controls is to perform singular value decomposition (or more generally factor analysis). This is actually clear from the probabilistic interpretation of PCA and the observation that what it means to be in the set of “control genes” C, in a setting where there are no observed factors Z, is that

$Y_C = W\alpha_C + \epsilon_C$.

That is, for such control genes the corresponding $\beta$ parameters are zero. This is a simple but powerful observation, because the explicit designation of control genes in the procedure makes it clear how to estimate W, and therefore the procedure becomes conceptually compelling and practically simple to implement. Thus, even though the model being used is the same as that of Leek & Storey, there is a novel idea in the paper that makes the procedure “cleaner”. Indeed, Gagnon-Bartsch & Speed provide experimental results in their paper showing that RUV-2 outperforms SVA. Even more convincing is the use of RUV-2 by others. For example, in a paper on “The functional consequences of variation in transcription factor binding” by Cusanovich et al., PLoS Genetics 2014, RUV-2 is shown to work well, and the authors explain how it helps them to take advantage of the controls in the experimental design they created.
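The two steps of RUV-2 described above can be written out as follows. This is a sketch in my own notation rather than a quotation from the paper: it assumes no observed covariates Z, writes $\beta$ and $\alpha$ for the parameter matrices attached to X and W, takes k as given, and uses a truncated SVD as the factor analysis step.

```latex
% Step 1: factor analysis restricted to the control genes C (where \beta_C = 0)
\[
Y_C = W\alpha_C + \epsilon_C, \qquad
Y_C = U \Lambda V^{T} \ \text{(SVD)}, \qquad
\hat{W} = U_k \Lambda_k ,
\]
% where U_k holds the first k left singular vectors and \Lambda_k the k largest singular values.

% Step 2: ordinary least squares on all genes, treating \hat{W} as observed
\[
\begin{pmatrix} \hat{\beta} \\ \hat{\alpha} \end{pmatrix}
= \left( [X \ \hat{W}]^{T} [X \ \hat{W}] \right)^{-1} [X \ \hat{W}]^{T} \, Y .
\]
```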

There is a tech report and also a preprint that follow up on the Gagnon-Bartsch & Speed paper; the tech report extends RUV-2 to a four-step method, RUV-4 (it also provides a very clear exposition of the statistics), and separately the preprint describes an extension to RUV-2 for the case where the factor of interest is also unknown. Both of these papers build on the original paper in significant ways and are important work that, to return to the original question in the post, is certainly on the right side of “the line”.

The wrong side of the line?

The development of RUV-2 and SVA occurred in the context of microarrays, and it is natural to ask whether the details are really different for RNA-Seq (spoiler: they aren’t). In a book chapter published earlier this year:

D. Risso, J. Ngai, T.P. Speed, S. Dudoit, The role of spike-in standards in the normalization of RNA-Seq, in Statistical Analysis of Next Generation Sequencing Data (2014), 169-190.

the authors replace “log expression levels” from microarrays with “log counts” from RNA-Seq, and the linear regression performed with Limma for RUV-2 with a Poisson regression (this involves one different R command). They call the new method RUV, which is the same as the previously published RUV, a naming convention that makes sense since the paper has no new method. In fact, the mathematical formulas describing the method are identical (and even in almost identical notation!) with the exception that the book chapter ignores Z altogether, and replaces $\epsilon$ with O.

To be fair, there is one added highlight in the book chapter, namely the observation that spike-ins can be used in lieu of housekeeping (or other control) genes. The method is unchanged, of course. It is just that the spike-ins are used to estimate W. Although spike-ins were not mentioned in the original Gagnon-Bartsch paper, there is no reason not to use them with arrays as well; they are standard with Affymetrix arrays.

My one critique of the chapter is that it doesn’t make sense to me that counts are used in the procedure. I think it would be better to use abundance estimates, and in fact I believe that Jeff Leek has already investigated the possibility in a preprint that appears to be an update to his original SVA work. That issue aside, the book chapter does provide concrete evidence using a Zebrafish experiment that RUV-2 is relevant and works for RNA-Seq data.

The story should end here (and this blog post would not have been written if it had) but two weeks ago, among five RNA-Seq papers published in Nature Biotechnology (I have yet to read the others), I found the following publication:

D. Risso, J. Ngai, T.P. Speed, S. Dudoit, Normalization of RNA-Seq data using factor analysis of control genes or samples, Nature Biotechnology 32 (2014), 896-902.

This paper has the same authors as the book chapter (with the exception that Sandrine Dudoit is now a co-corresponding author with Davide Risso, who was the sole corresponding author on the first publication), and, it turns out, it is basically the same paper… in fact in many parts it is the identical paper. It looks like the Nature Biotechnology paper is an edited and polished version of the book chapter, with a handful of additional figures (based on the same data) and better graphics. I thought that Nature journals publish original and reproducible research papers. I guess I didn’t realize that for some people “reproducible” means “reproduce your own previous research and republish it”.

At this point, before drawing attention to some comparisons between the papers, I’d like to point out that the book chapter was refereed. This is clear from the fact that it is described as such in both corresponding authors’ CVs.

How similar are the two papers?

Final paragraph of paper in the book:

Internal and external controls are essential for the analysis of high-throughput data and spike-in sequences have the potential to help researchers better adjust for unwanted technical effects. With the advent of single-cell sequencing [35], the role of spike-in standards should become even more important, both to account for technical variability [6] and to allow the move from relative to absolute RNA expression quantification. It is therefore essential to ensure that spike-in standards behave as expected and to develop a set of controls that are stable enough across replicate libraries and robust to both differences in library composition and library preparation protocols.

Final paragraph of paper in Nature Biotechnology:

Internal and external controls are essential for the analysis of high-throughput data and spike-in sequences have the potential to help researchers better adjust for unwanted technical factors. With the advent of single-cell sequencing27, the role of spike-in standards should become even more important, both to account for technical variability28 and to allow the move from relative to absolute RNA expression quantification. It is therefore essential to ensure that spike-in standards behave as expected and to develop a set of controls that are stable enough across replicate libraries and robust to both differences in library composition and library preparation protocols.

Abstract of paper in the book:

Normalization of RNA-seq data is essential to ensure accurate inference of expression levels, by adjusting for sequencing depth and other more complex nuisance effects, both within and between samples. Recently, the External RNA Control Consortium (ERCC) developed a set of 92 synthetic spike-in standards that are commercially available and relatively easy to add to a typical library preparation. In this chapter, we compare the performance of several state-of-the-art normalization methods, including adaptations that directly use spike-in sequences as controls. We show that although the ERCC spike-ins could in principle be valuable for assessing accuracy in RNA-seq experiments, their read counts are not stable enough to be used for normalization purposes. We propose a novel approach to normalization that can successfully make use of control sequences to remove unwanted effects and lead to accurate estimation of expression fold-changes and tests of differential expression.

Abstract of paper in Nature Biotechnology:

Normalization of RNA-sequencing (RNA-seq) data has proven essential to ensure accurate inference of expression levels. Here, we show that usual normalization approaches mostly account for sequencing depth and fail to correct for library preparation and other more complex unwanted technical effects. We evaluate the performance of the External RNA Control Consortium (ERCC) spike-in controls and investigate the possibility of using them directly for normalization. We show that the spike-ins are not reliable enough to be used in standard global-scaling or regression-based normalization procedures. We propose a normalization strategy, called remove unwanted variation (RUV), that adjusts for nuisance technical effects by performing factor analysis on suitable sets of control genes (e.g., ERCC spike-ins) or samples (e.g., replicate libraries). Our approach leads to more accurate estimates of expression fold-changes and tests of differential expression compared to state-of-the-art normalization methods. In particular, RUV promises to be valuable for large collaborative projects involving multiple laboratories, technicians, and/or sequencing platforms.

Abstract of Gagnon-Bartsch & Speed paper that already took credit for a “new” method called RUV:

Microarray expression studies suffer from the problem of batch effects and other unwanted variation. Many methods have been proposed to adjust microarray data to mitigate the problems of unwanted variation. Several of these methods rely on factor analysis to infer the unwanted variation from the data. A central problem with this approach is the difficulty in discerning the unwanted variation from the biological variation that is of interest to the researcher. We present a new method, intended for use in differential expression studies, that attempts to overcome this problem by restricting the factor analysis to negative control genes. Negative control genes are genes known a priori not to be differentially expressed with respect to the biological factor of interest. Variation in the expression levels of these genes can therefore be assumed to be unwanted variation. We name this method “Remove Unwanted Variation, 2-step” (RUV-2). We discuss various techniques for assessing the performance of an adjustment method and compare the performance of RUV-2 with that of other commonly used adjustment methods such as Combat and Surrogate Variable Analysis (SVA). We present several example studies, each concerning genes differentially expressed with respect to gender in the brain and find that RUV-2 performs as well or better than other methods. Finally, we discuss the possibility of adapting RUV-2 for use in studies not concerned with differential expression and conclude that there may be promise but substantial challenges remain.

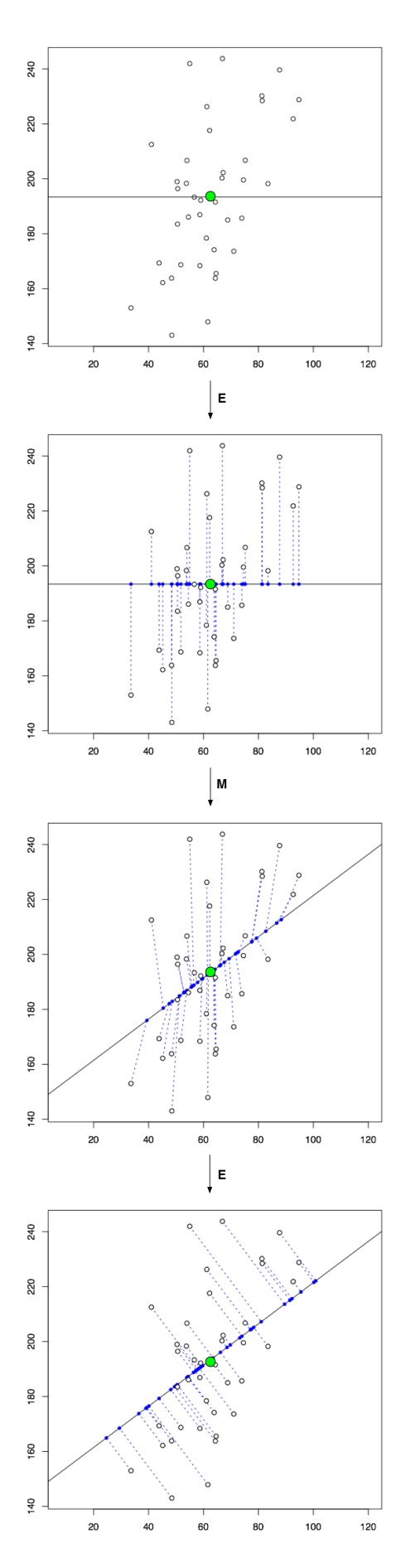

Many figures are also the same (except one that appears to have been fixed in the Nature Biotechnology paper; I leave the discovery of the figure as an exercise to the reader). Here is Figure 9.2 in the book:

The two panels appear as (b) and (c) in Figure 4 in the Nature Biotechnology paper (albeit transformed via a 90 degree rotation and reflection from the dihedral group):

The book chapter and the Nature Biotechnology paper are essentially the same, down to the math notation, which even two papers removed is just a rehashing of the RUV method of Gagnon-Bartsch & Speed. A complete diff of the papers is beyond the scope of this blog post and technically not trivial to perform, but examination by eye reveals one to be a draft of the other.

Although it is acceptable in the academic community to draw on material from published research articles for expository book chapters (with permission), and conversely to publish preprints, including conference proceedings, in journals, this case is different: (a) the book chapter was refereed, exactly like a journal publication, (b) the material in the chapter is not expository; it is research, (c) it was published before the Nature Biotechnology article, and presumably prepared long before, (d) the book chapter cites the Nature Biotechnology article but not vice versa, and (e) the book chapter is not a particularly innovative piece of work to begin with. The method it describes and claims to be “novel”, namely RUV, was already published by Gagnon-Bartsch & Speed.

Below is a musical rendition of what has happened here:

In the Jeopardy! game show contestants are presented with questions formulated as answers that require answers in the form of questions. For example, if a contestant selects “Normality for $200” she might be shown the following clue:

“The average $\frac{x_1+x_2+\cdots+x_n}{n}$,”

to which she would reply “What is the maximum likelihood estimate for the mean of n independent identically distributed Gaussian random variables from which samples have been obtained?” Host Alex Trebek would immediately exclaim “That is the correct answer for $200!”

The process of doing mathematics involves repeatedly playing Jeopardy! with oneself in an unending quest to understand everything just a little bit better. The purpose of this blog post is to provide an exposition of how this works for understanding principal component analysis (PCA): I present four Jeopardy clues in the “Normality” category that all share the same answer: “What is principal component analysis?” The post was motivated by a conversation I recently had with a well-known population geneticist at a conference I was attending. I mentioned to him that I would be saying something about PCA in my talk, and that he might find what I have to say interesting because I knew he had used the method in many of his papers. Without hesitation he replied that he was well aware that PCA was not a statistical method and merely a heuristic visualization tool.

The problem, of course, is that PCA does have a statistical interpretation and is not at all an ad-hoc heuristic. Unfortunately, the previously mentioned population geneticist is not alone; there is a lot of confusion about what PCA is really about. For example, in one textbook it is stated that “PCA is not a statistical method to infer parameters or test hypotheses. Instead, it provides a method to reduce a complex dataset to lower dimension to reveal sometimes hidden, simplified structure that often underlie it.” In another one finds out that “PCA is a statistical method routinely used to analyze interrelationships among large numbers of objects.” In a highly cited review on gene expression analysis PCA is described as “more useful as a visualization technique than as an analytical method” but then in a paper by Markus Ringnér titled the same as this post, i.e. What is principal component analysis? in Nature Biotechnology, 2008, the author writes that “Principal component analysis (PCA) is a mathematical algorithm that reduces the dimensionality of the data while retaining most of the variation in the data set” (the author then avoids going into the details because “understanding the details underlying PCA requires knowledge of linear algebra”). All of these statements are both correct and incorrect and confusing. A major issue is that the description by Ringnér of PCA in terms of the procedure for computing it (singular value decomposition) is common and unfortunately does not shed light on when it should be used. But knowing when to use a method is far more important than knowing how to do it.

I therefore offer four Jeopardy! clues for principal component analysis that I think help to understand both when and how to use the method:

1. An affine subspace closest to a set of points.

Suppose we are given n numbers $x_1,\ldots,x_n$ as in the initial example above. We are interested in finding the “closest” number to these numbers. By “closest” we mean in the sense of total squared difference. That is, we are looking for a number $m$

such that

$f(m) = (x_1-m)^2 + (x_2-m)^2 + \cdots + (x_n-m)^2$

is minimized.

This is a (straightforward) calculus problem, solved by taking the derivative of the function above and setting it equal to zero. If we let $f(m) = \sum_{i=1}^n (x_i-m)^2$ then

$f'(m) = -2 \sum_{i=1}^n (x_i-m)$

and setting $f'(m) = 0$ we can solve for $m$ to obtain

$m = \frac{1}{n}\sum_{i=1}^n x_i$.

The right hand side of the equation is just the average of the n numbers and the optimization problem provides an interpretation of it as the number minimizing the total squared difference with the given numbers (note that one can replace squared difference by absolute value, i.e. minimization of $\sum_{i=1}^n |x_i-m|$, in which case the solution for m is the median; we return to this point and its implications for PCA later).
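The two optimization problems can be checked numerically. The following is a minimal sketch using only the Python standard library; the data values and the search grid are made up for illustration, and the brute-force grid scan stands in for the calculus argument rather than replacing it.

```python
import statistics

def total_squared_difference(xs, m):
    """Sum of (x_i - m)^2 over the given numbers."""
    return sum((x - m) ** 2 for x in xs)

def total_absolute_difference(xs, m):
    """Sum of |x_i - m| over the given numbers."""
    return sum(abs(x - m) for x in xs)

# Hypothetical example data (not from the post); note the outlier 23.
xs = [2.0, 3.0, 5.0, 7.0, 23.0]
mean = statistics.fmean(xs)
median = statistics.median(xs)

# Brute-force scan over candidate values 0.00, 0.01, ..., 25.00.
grid = [i / 100 for i in range(0, 2501)]
best_sq = min(grid, key=lambda m: total_squared_difference(xs, m))
best_abs = min(grid, key=lambda m: total_absolute_difference(xs, m))

print("mean:", mean, "minimizer of squared differences:", best_sq)
print("median:", median, "minimizer of absolute differences:", best_abs)
```

With an odd number of distinct values the absolute-value problem has a unique minimizer, which is why the scan can be compared directly with the median here; with an even number of values any point between the two middle values minimizes it.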

Suppose that instead of n numbers, one is given n points in $\mathbb{R}^p$. That is, point i is

$x^i = (x^i_1,\ldots,x^i_p)$. We can now ask for a point

$m$ with the property that the squared distance of

$m$ to the n points is minimized. This is asking for

$\min_{m} \sum_{i=1}^n \|x^i-m\|_2^2$.

The solution for $m$ can be obtained by minimizing each coordinate independently, thereby reducing the problem to the simpler version of numbers above, and it follows that

$m = \frac{1}{n}\sum_{i=1}^n x^i$.
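As a small worked check of the coordinate-wise argument, the sketch below computes the centroid of a few made-up points in three dimensions and compares the total squared distance at the centroid with that at another point. The points and the comparison point are hypothetical; the identity used in the final comment (the cost at any point equals the cost at the centroid plus n times the squared distance from that point to the centroid) is a standard consequence of expanding the squares.

```python
def centroid(points):
    """Coordinate-wise average of a list of equal-length points."""
    n = len(points)
    p = len(points[0])
    return [sum(pt[j] for pt in points) / n for j in range(p)]

def total_squared_distance(points, m):
    """Sum over the points of the squared Euclidean distance to m."""
    return sum(sum((a - b) ** 2 for a, b in zip(pt, m)) for pt in points)

# Hypothetical example: four points in R^3.
points = [(1.0, 2.0, 0.0), (3.0, 0.0, 4.0), (5.0, 4.0, 2.0), (-1.0, 2.0, 2.0)]
c = centroid(points)

other = [3.0, 1.0, 2.0]  # an arbitrary competing point
cost_at_centroid = total_squared_distance(points, c)
cost_at_other = total_squared_distance(points, other)

# Squared distance between the competing point and the centroid.
gap = sum((a - b) ** 2 for a, b in zip(other, c))

print("centroid:", c)
print("cost at centroid:", cost_at_centroid)
# cost_at_other should equal cost_at_centroid + n * gap
print("cost at other point:", cost_at_other, "=", cost_at_centroid + len(points) * gap)
```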

This is 0-dimensional PCA, i.e., PCA of a set of points onto a single point, and it is the centroid of the points. The generalization of this concept provides a definition for PCA:

Definition: Given n points in $\mathbb{R}^p$, principal components analysis consists of choosing a dimension $k < p$ and then finding the affine space of dimension k with the property that the squared distance of the points to their orthogonal projection onto the space is minimized.

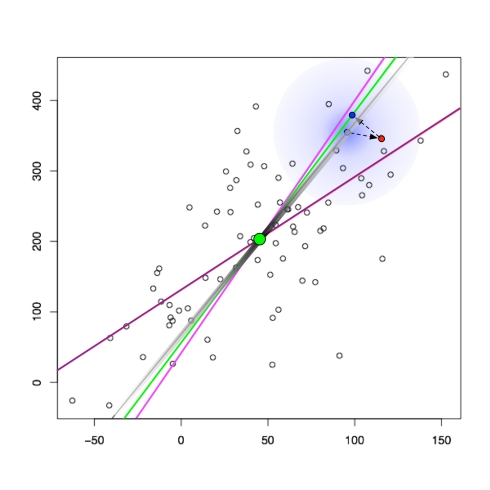

This definition can be thought of as a generalization of the centroid (or average) of the points. To understand this generalization, it is useful to think of the simplest case that is not 0-dimensional PCA, namely 1-dimensional PCA of a set of points in two dimensions:

In this case the 1-dimensional PCA subspace can be thought of as the line that best represents the average of the points. The blue points are the orthogonal projections of the points onto the “average line” (see, e.g., the red point projected orthogonally), which minimizes the squared lengths of the dashed lines. In higher dimensions line is replaced by affine subspace, and the orthogonal projections are to points on that subspace. There are a few properties of the PCA affine subspaces that are worth noting:

- The set of PCA subspaces (translated to the origin) form a flag. This means that the PCA subspace of dimension k is contained in the PCA subspace of dimension k+1. For example, all PCA subspaces contain the centroid of the points (in the figure above the centroid is the green point). This follows from the fact that the PCA subspaces can be incrementally constructed by building a basis from eigenvectors of a single matrix, a point we will return to later.

- The PCA subspaces are not scale invariant. For example, if the points are scaled by multiplying one of the coordinates by a constant, then the PCA subspaces change. This is obvious because the centroid of the points will change. For this reason, when PCA is applied to data obtained from heterogeneous measurements, the units matter. One can form a “common” set of units by scaling the values in each coordinate to have the same variance.

- If the data points are represented in matrix form as an $n \times p$ matrix $X$, and the points orthogonally projected onto the PCA subspace of dimension k are represented in the ambient p-dimensional space by a matrix $\tilde{X}$, then $\tilde{X} = \mathrm{argmin}_{M : \mathrm{rk}(M)=k} \|X-M\|_F$. That is, $\tilde{X}$ is the matrix of rank k with the property that the Frobenius norm $\|X-\tilde{X}\|_F$ is minimized. This is just a rephrasing in linear algebra of the definition of PCA given above.
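The definition and the scale-dependence property can be illustrated for the simplest case of 1-dimensional PCA in the plane. The sketch below is only an illustration under stated assumptions: the five points are made up, and the closed-form angle `0.5 * atan2(2*sxy, sxx - syy)` is the standard expression for the leading eigenvector direction of a 2×2 scatter matrix, used here in place of a general eigenvector or singular value computation.

```python
import math

def pca_line(points):
    """Return (centroid, angle) of the 1-dimensional PCA line for 2-D points."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    # Entries of the 2x2 scatter matrix of the centred points.
    sxx = sum((x - cx) ** 2 for x, _ in points)
    syy = sum((y - cy) ** 2 for _, y in points)
    sxy = sum((x - cx) * (y - cy) for x, y in points)
    # Direction of the leading eigenvector of the scatter matrix.
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    return (cx, cy), theta

def squared_orthogonal_distance(points, centre, theta):
    """Total squared distance from the points to the line through
    `centre` with direction angle `theta`."""
    cx, cy = centre
    nx, ny = -math.sin(theta), math.cos(theta)  # unit normal to the line
    return sum(((x - cx) * nx + (y - cy) * ny) ** 2 for x, y in points)

# Hypothetical example points in the plane.
points = [(0.0, 0.0), (1.0, 1.0), (2.0, 1.0), (3.0, 3.0), (4.0, 2.0)]
centre, theta = pca_line(points)
best_cost = squared_orthogonal_distance(points, centre, theta)

# No other line through the centroid does better (scan 1-degree steps).
scan = [squared_orthogonal_distance(points, centre, math.radians(d))
        for d in range(180)]

# Scale dependence: multiply the second coordinate by 10 and recompute.
scaled = [(x, 10.0 * y) for x, y in points]
_, theta_scaled = pca_line(scaled)
# Angle obtained by merely stretching the original PCA line by the same factor.
theta_stretched = math.atan(10.0 * math.tan(theta))

print("PCA angle (degrees):", math.degrees(theta))
print("PCA angle after rescaling:", math.degrees(theta_scaled))
print("stretched original line:", math.degrees(theta_stretched))
```

The last two printed angles differ, which shows that rescaling a coordinate changes the PCA line itself and not only its drawing: the PCA line of the rescaled points is not the rescaled PCA line.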

At this point it is useful to mention some terminology confusion associated with PCA. Unfortunately there is no standard for describing the various parts of an analysis. What I have called the “PCA subspaces” are also sometimes called “principal axes”. The orthogonal vectors forming the flag mentioned above are called “weight vectors”, or “loadings”. Sometimes they are called “principal components”, although that term is sometimes used to refer to points projected onto a principal axis. In this post I stick to “PCA subspaces” and “PCA points” to avoid confusion.

Returning to Jeopardy!, we have “Normality for $400” with the answer “An affine subspace closest to a set of points” and the question “What is PCA?”. One question at this point is why the Jeopardy! question just asked is in the category “Normality”. After all, the normal distribution does not seem to be related to the optimization problem just discussed. The connection is as follows:

2. A generalization of linear regression in which the Gaussian noise is isotropic.

PCA has an interpretation as the maximum likelihood parameter of a linear Gaussian model, a point that is crucial in understanding the scope of its application. To explain this point of view, we begin by elaborating on the opening Jeopardy! question about Normality for $200:

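The point of the question was that the average of n numbers can be interpreted as a maximum likelihood estimation of the mean of a Gaussian. The Gaussian distribution is

$f(x,\mu,\sigma) = \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}$. Given the numbers

$x_1,\ldots,x_n$, the likelihood function is therefore

$L(\mu,\sigma) = \prod_{i=1}^n \frac{1}{\sqrt{2\pi\sigma^2}} e^{-\frac{(x_i-\mu)^2}{2\sigma^2}}$. The maximum of this function is the same as the maximum of its logarithm, which is

$\log L(\mu,\sigma) = -\frac{n}{2}\log(2\pi\sigma^2) - \frac{1}{2\sigma^2}\sum_{i=1}^n (x_i-\mu)^2$. Therefore the problem of finding the maximum likelihood estimate for the mean is equivalent to that of finding the minimum of the function

$S(\mu) = \sum_{i=1}^n (x_i-\mu)^2$. This is exactly the optimization problem solved by 0-dimensional PCA, as we saw above. With this calculation at hand, we turn to the statistical interpretation of least squares: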
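The equivalence between maximizing the Gaussian likelihood in the mean and minimizing the sum of squares can be sketched numerically. The example below is a hedged illustration: the data and the grid of candidate means are made up, and the function name `gaussian_log_likelihood` is introduced here only for the example. It shows that the maximizing mean is the average whatever value is assumed for the standard deviation.

```python
import math

def gaussian_log_likelihood(xs, mu, sigma):
    """Log-likelihood of i.i.d. Gaussian samples xs with mean mu and
    standard deviation sigma."""
    n = len(xs)
    return (-0.5 * n * math.log(2 * math.pi * sigma ** 2)
            - sum((x - mu) ** 2 for x in xs) / (2 * sigma ** 2))

# Hypothetical example data (not from the post).
xs = [2.0, 3.0, 5.0, 7.0, 23.0]
average = sum(xs) / len(xs)

# Candidate means 0.00, 0.01, ..., 25.00.
grid = [i / 100 for i in range(0, 2501)]

# For several assumed standard deviations, find the mean that
# maximizes the log-likelihood by brute force.
mle_by_sigma = {
    sigma: max(grid, key=lambda mu: gaussian_log_likelihood(xs, mu, sigma))
    for sigma in (0.5, 1.0, 4.0)
}

print("average:", average)
for sigma, mu_hat in mle_by_sigma.items():
    print("sigma =", sigma, "-> maximizing mean:", mu_hat)
```

The standard deviation only rescales and shifts the log-likelihood as a function of the mean, so it does not move the location of the maximum.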

Given n points $\{(x_i,y_i)\}_{i=1}^n$ in the plane (see figure above), the least squares line

$y = mx+b$ (purple in figure) is the one that minimizes the sum of the squares

$\sum_{i=1}^n (mx_i+b-y_i)^2$. That is, the least squares line is the one minimizing the sum of the squared vertical distances to the points. As with the average of numbers, the least squares line has a statistical interpretation: Suppose that there is some line

$y = m^{*}x+b^{*}$ (black line in figure) that is unknown, but that “generated” the observed points, in the sense that each observed point i was obtained by perturbing the point

$(x_i, m^{*}x_i+b^{*})$ vertically by a random amount from a single Gaussian distribution with mean 0 and variance