
If you haven’t heard about Clubhouse yet… well, it’s the latest Silicon Valley unicorn, and the popular new chat hole for thought leaders. I heard about it for the first time a few months ago, and was kindly offered an invitation (Clubhouse is invitation only!) so I could explore what it is all about. Clubhouse is an app for audio-based social networking, and the content is, as far as I can tell, a mixed bag. I’ve listened to a handful of conversations hosted on the app; topics include everything from bitcoin to Miami. It was interesting, at times, to hear the thoughts and opinions of some of the discussants. On the other hand, there is a lot of superficial rambling on Clubhouse as well. During a conversation about genetics I heard someone posit that biology has a lot to learn from the fashion industry. This was delivered in a “you are hearing something profound” manner, by someone who clearly knew nothing about either biology or the fashion industry, which is really too bad, because the fashion industry is quite interesting and I wouldn’t be surprised at all if biology has something to learn from it. Unfortunately, I never learned what that is.

One of the regulars on Clubhouse is Noor Siddiqui. You may not have heard of her; in fact she is officially “not notable”. That is to say, she used to have a Wikipedia page but it was deleted on the grounds that there is nothing about her that indicates notability, which is of course notable in and of itself… a paradox that says more about Wikipedia’s gatekeeping than about Siddiqui (Russell 1903, Litt 2021). In any case, Siddiqui was recently part of a Clubhouse conversation on “convergence of genomics and reproductive technology” together with Carlos Bustamante (advisor to cryptocurrency-based Luna DNA and soon to be professor of business technology at the University of Miami) and Balaji Srinivasan (bitcoin angel investor and entrepreneur). As it happens, Siddiqui is the CEO of a startup called “Orchid Health”, in the genomics and reproductive technology “space”. The company promises to harness “population genetics, statistical modeling, reproductive technologies, and the latest advances in genomic science” to “give parents the option to lower a future child’s genetic risk by creating embryos through in IVF and implanting embryos in the order that can reduce disease risk.” This “product” will be available later this year. Bustamante and Srinivasan are early “operators and investors” in the venture.

Orchid is not Siddiqui’s first startup. While she doesn’t have a Wikipedia page, she does have a website where she boasts of having (briefly) been a Thiel fellow and, together with her sister, starting a company as a teenager. The idea of the (briefly in existence) startup was apparently to help the now commercially defunct Google Glass gain acceptance by bringing the device to the medical industry. According to Siddiqui, Orchid is also not her first dive into statistical modeling or genomics. She notes on her website that she did “AI and genomics research”, specifically on “deep learning for genomics”. Such training and experience could have been put to good use but…

Polygenic risk scores and polygenic embryo selection

Orchid Health claims that it will “safely and naturally, protect your baby from diseases that run in your family” (the slogan “have healthy babies” is prominently displayed on the company’s website). The way it will do this is to utilize “advances in machine learning and artificial intelligence” to screen embryos created through in-vitro fertilization (IVF) for “breast cancer, prostate cancer, heart disease, atrial fibrillation, stroke, type 2 diabetes, type 1 diabetes, inflammatory bowel disease, schizophrenia and Alzheimer’s”. What this means in (a statistical geneticist’s) layman’s terms is that Orchid is planning to use polygenic risk scores derived from genome-wide association studies to perform polygenic embryo selection for complex diseases. This can be easily unpacked because it’s quite a simple proposition, although it’s far from a trivial one: the statistical genetics involved is deep and complicated.

First, a single-gene disorder is a health problem that is caused by a single mutation in the genome. Examples of such disorders include Tay-Sachs disease, sickle cell anemia, Huntington’s disease, Duchenne muscular dystrophy, and many other diseases. A “complex disease”, also called a multifactorial disease, is a disease that has a genetic component, but one that involves multiple genes, i.e. it is not a single-gene disorder. Crucially, complex diseases may involve effects of environmental factors, whose role in causing disease may depend on the genetic composition of an individual. The diseases listed on Orchid’s website, including breast cancer, prostate cancer, heart disease, atrial fibrillation, stroke, type 2 diabetes, type 1 diabetes, inflammatory bowel disease, schizophrenia and Alzheimer’s disease, are all examples of complex (multifactorial) diseases.

To identify genes that *associate* with a complex disease, researchers perform genome-wide association studies (GWAS). In such studies, researchers typically analyze several million genomic sites in large numbers of individuals with and without a disease (this used to be thousands of individuals, nowadays hundreds of thousands or millions) and perform regressions to assess the marginal effect at each locus. I italicized the word associate above, because genome-wide association studies do not, in and of themselves, point to genomic loci that cause disease. Rather, they produce, as output, lists of genomic loci that have varying degrees of association with the disease or trait of interest.

Polygenic risk scores (PRS), which the Broad Institute claims to have discovered (narrator: they were not discovered at the Broad Institute), are a way to combine the multiple genetic loci associated with a complex disease from a GWAS. Specifically, a PRS for a complex disease is given by

$$\mathrm{PRS} = \sum_{i=1}^{m} \beta_i X_i$$

where the sum is over different genetic loci, the $X_i$ are coded genetic markers for an individual at the $m$ loci, and the $\beta_i$ are weights based on the marginal effects derived from a GWAS. The concept of a PRS is straightforward, but the details are complicated, in some cases subtle, and generally non-trivial. There is debate over how many genomic loci should be used in computing a polygenic risk score given that the vast majority of marginal effects are very close to zero (Janssens 2019), lots of ongoing research about how to set the weights to account for issues such as bias caused by linkage disequilibrium (Vilhjálmsson et al. 2015, Shin et al. 2017, Newcombe et al. 2019, Ge et al. 2019, Lloyd-Jones et al. 2019, Pattee and Pan 2020, Song et al. 2020), and continuing discussions about the ethics of using polygenic risk scores in the clinic (Lewis and Green 2021).
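To make the definition concrete, here is a minimal sketch of a PRS as a weighted sum over loci. The function name, the 0/1/2 coding of genetic markers, and the five weights are illustrative assumptions, not values from any real GWAS.

```python
from typing import Sequence


def polygenic_risk_score(genotypes: Sequence[int], weights: Sequence[float]) -> float:
    """Weighted sum of coded genetic markers, one term per locus.

    Assumption: each marker is coded as the number of copies (0, 1 or 2)
    of the effect allele carried by the individual at that locus.
    """
    if len(genotypes) != len(weights):
        raise ValueError("need exactly one weight per locus")
    return sum(w * x for w, x in zip(weights, genotypes))


# Hypothetical example: five loci with made-up marginal effect sizes.
weights = [0.02, -0.01, 0.05, 0.00, 0.03]
genotypes = [2, 1, 0, 2, 1]

score = polygenic_risk_score(genotypes, weights)
print(f"PRS = {score:.2f}")
```

Everything that makes PRS hard in practice (which loci to include, how to set the weights in the presence of linkage disequilibrium) is hidden in the choice of `weights`; the sum itself is trivial.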

While much of the discussion around PRS centers on applications such as determining diagnostic testing frequency (Wald and Old 2019), polygenic embryo selection (PES) posits that polygenic risk scores should be taken a step further and evaluated for embryos to be used as a basis for discarding, or selecting, specific embryos for in vitro fertilization implantation. The idea has been widely criticized and critiqued (Karavani et al. 2019). It has been described as unethical and morally repugnant, and concerns about its use for eugenics have been voiced by many. Underlying these criticisms is the fact that the technical issues with PES using PRS are manifold.

Poor penetrance

The term “penetrance” for a disease refers to the proportion of individuals with a particular genetic variant that have the disease. Many single-gene disorders have very high penetrance. For example, the F508del mutation in the CFTR gene is 100% penetrant for cystic fibrosis. That is, 100% of people who are homozygous for this variant, meaning that both copies of their DNA have a deletion of the phenylalanine amino acid in position 508 of their CFTR gene, will have cystic fibrosis. The vast majority of variants associated with complex diseases have very low penetrance. For example, in schizophrenia, the penetrance of “high risk” de novo copy number variants (in which there are variable copies of DNA at a genomic locus) was found to be between 2% and 7.4% (Vassos et al 2010). The low penetrance at large numbers of variants for complex diseases was precisely the rationale for developing polygenic risk scores in the first place, the idea being that while individual variants yield small effects, perhaps in (linear) combination they can have more predictive power. While it is true that combining variants does yield more predictive power for complex diseases, unfortunately the accuracy is, in absolute terms, very low.

The reason for low predictive power of PRS is explained well in (Wald and Old 2020) and is illustrated for coronary artery disease (CAD) in (Rotter and Lin 2020):

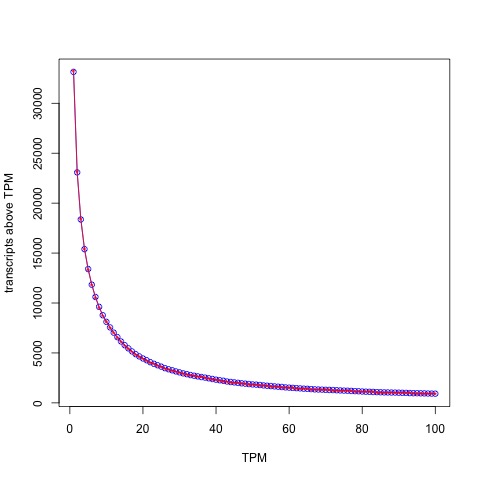

The issue is that while the polygenic risk score distribution may indeed be shifted for individuals with a disease, and while this shift may be statistically significant resulting in large odds ratios, i.e. much higher relative risk for individuals with higher PRS, the proportion of individuals in the tail of the distributions who will or won’t develop the disease will greatly affect the predictive power of the PRS. For example, Wald and Old note that PRS for CAD from (Khera et al. 2018) will confer a detection rate of only 15% with a false positive rate of 5%. At a 3% false positive rate the detection rate would be only 10%. This is visible in the figure above, where it is clear that control of the false positive rate (i.e. thresholding at the extreme right-hand side with high PRS score) will filter out many (most) affected individuals. The same issue is raised in the excellent review on PES of (Lázaro-Muñoz et al. 2020). The authors explain that “even if a PRS in the top decile for schizophrenia conferred a nearly fivefold increased risk for a given embryo, this would still yield a >95% chance of not developing the disorder.” It is worth noting in this context that diseases like schizophrenia are not even well defined phenotypically (Mølstrøm et al. 2020), which is another complex matter that is too involved to go into in detail here.
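The tradeoff between detection rate and false positive rate can be sketched numerically. The model below is an assumption made for illustration, not the analysis of Wald and Old: PRS in unaffected and affected individuals are taken to be two normal distributions with equal variance, differing only by a shift. The shift is calibrated from the quoted 15% detection rate at a 5% false positive rate and then used to recompute the detection rate at a 3% false positive rate.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def detection_rate(false_positive_rate: float, shift: float) -> float:
    """Fraction of affected individuals whose PRS exceeds the cutoff that
    flags `false_positive_rate` of the unaffected individuals."""
    cutoff = STD_NORMAL.inv_cdf(1.0 - false_positive_rate)
    return 1.0 - STD_NORMAL.cdf(cutoff - shift)


def shift_from(false_positive_rate: float, detection: float) -> float:
    """Shift (in standard deviations) between the two distributions that is
    implied by a single (false positive rate, detection rate) pair."""
    cutoff = STD_NORMAL.inv_cdf(1.0 - false_positive_rate)
    return cutoff - STD_NORMAL.inv_cdf(1.0 - detection)


# Calibrate on the figures quoted in the text: 15% detection at 5% false positives.
shift = shift_from(0.05, 0.15)
dr_at_3_percent = detection_rate(0.03, shift)

print(f"implied shift: {shift:.2f} standard deviations")
print(f"detection rate at 3% false positive rate: {dr_at_3_percent:.1%}")
```

Under this simple model the two distributions are separated by well under one standard deviation, and tightening the false positive rate to 3% gives a detection rate of roughly 10%, in line with the figure quoted above.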

In a recent tweet, Siddiqui describes natural conception as a genetic lottery, and suggests that Orchid Health, by performing PES, can tilt the odds in customers’ favor. To do so the false positive rate must be low, or else too many embryos will be discarded. But a 15% sensitivity is highly problematic considering the risks inherent with IVF in the first place (Kamphuis et al. 2014):

To be concrete, an odds ratio of 2.8 for cerebral palsy needs to be balanced against the fact that in the Khera et al. study, only 8% of individuals had an odds ratio >3.0 for CAD. Other diseases are even worse, in this sense, than CAD. In atrial fibrillation (one of the diseases on Orchid Health’s list), only 9.3% of the individuals in the top 0.44% of the atrial fibrillation PRS actually had atrial fibrillation (Choi et al 2019).

As one starts to think carefully about the practical aspects and tradeoffs in performing PES, other issues, resulting from the low penetrance of complex disease variants, come into play as well. (Lencz et al. 2020) examine these tradeoffs in detail, and conclude that “the differential performance of PES across selection strategies and risk reduction metrics may be difficult to communicate to couples seeking assisted reproductive technologies… These difficulties are expected to exacerbate the already profound ethical issues raised by PES… which include stigmatization, autonomy (including “choice overload”), and equity. In addition, the ever-present specter of eugenics may be especially salient in the context of the LRP (lowest-risk prioritization) strategy.” They go on to “call for urgent deliberations amongst key stakeholders (including researchers, clinicians, and patients) to address governance of PES and for the development of policy statements by professional societies.”

Pleiotropy predicaments

I remember a conversation I had with Nicolas Bray several years ago shortly after the exciting discovery of CRISPR/Cas9 for genome editing, on the implications of the technology for improving human health. Nick pointed out that the development of genomics had been curiously “backwards”. Thirty years ago, when human genome sequencing was beginning in earnest, the hope was that with the sequence at hand we would be able to start figuring out the function of genes, and even individual base pairs in the genome. At the time, the human genome project was billed as being able to “help scientists search for genes associated with human disease” and it was imagined that “greater understanding of the genetic errors that cause disease should pave the way for new strategies in diagnosis, therapy, and disease prevention.” Instead, what happened is that genome editing technology has arrived well before we have any idea of what the vast majority of the genome does, let alone the implications of edits to it. Similarly, while the coupling of IVF and genome sequencing makes it possible to select embryos based on genetic variants today, the reality is that we have no idea how the genome functions, or what the vast majority of genes or variants actually do.

One thing that is known about the genome is that it is chock full of pleiotropy. This is statistical genetics jargon for the fact that variation at a single locus in the genome can affect many traits simultaneously. Whereas one might think naïvely that there are distinct genes affecting individual traits, in reality the genome is a complex web of interactions among its constituent parts, leading to extensive pleiotropy. In some cases pleiotropy can be antagonistic, which means that a genomic variant may simultaneously be harmful and beneficial. A famous example of this is the mutation to the beta globin gene that confers malaria resistance to heterozygotes (individuals with just one of their DNA copies carrying the mutation) and sickle cell anemia to homozygotes (individuals with both copies of their DNA carrying the mutation).

In the case of complex diseases we don’t really know enough, or anything, about the genome to be able to truly assess pleiotropy risks (or benefits). But there are some worries already. For example, HLA Class II genes are associated with Type 1 and non-insulin treated Type 2 diabetes (Jacobi et al 2020), Parkinson’s disease (e.g. James and Georgopolous 2020, which also describes an association with dementia) and Alzheimer’s (Wang and Xing 2020). Because HLA genes play a central role in the immune response, PES that results in selection against the variants associated with these diseases could very well lead to population susceptibility to infectious disease. Having said that, it is worth repeating that we don’t really know if the danger is serious, because we don’t have any idea what the vast majority of the genome does, nor the nature of antagonistic pleiotropy present in it. Almost certainly by selecting for one trait according to PRS, embryos will also be selected for a host of other unknown traits.

Thus, while Orchid Health is trying to convince potential customers not to “roll the dice”, by ignoring the complexities of pleiotropy and its implications for embryo selection the company is in fact rolling the dice for its customers (for a fee).

Population problems

One of Orchid Health’s selling points is that unlike other tests that “look at 2% of only one partner’s genome…Orchid sequences 100% of both partner’s genomes” resulting in “6 billion data points”. This refers to the “couples report”, which is a companion product of sorts to the polygenic embryo screening. The couples report is assembled by using the sequenced genomes of parents to simulate the genomes of potential babies, each of which is evaluated for PRS to provide a range of (PRS based) disease predictions for the couple’s potential children. Sequencing a whole genome is a lot more expensive than just assessing single nucleotide polymorphisms (SNPs) in a panel. That may be one reason that most direct-to-consumer genetics is based on polymorphism panels rather than sequencing. There is another: the vast majority of variation in the genome occurs at known polymorphic sites (there are a few million out of the approximately 3 billion base pairs in the genome), and to the extent that a variant might associate with a disease, it is likely that a neighboring common variant, which will be inherited together with the causal one, can serve as a proxy. There are rare variants that have been shown to associate with disease, but whether or not they can explain a large fraction of (genetic) disease burden is still an open question (Young 2019). So what has Siddiqui, who touts the benefits of whole-genome sequencing in a recent interview, discovered that others such as 23andMe have missed?
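The general idea behind a couples report, simulating potential children from two parental genomes and scoring each one, can be sketched as follows. This is a toy illustration and not Orchid Health’s method: it assumes independent loci (no linkage), made-up weights, and hypothetical function names, with each parent passing on one of their two alleles at random.

```python
import random
from typing import List, Sequence, Tuple

Alleles = Tuple[int, int]  # two alleles at one locus; 1 marks the effect allele


def simulate_child_prs(mother: Sequence[Alleles], father: Sequence[Alleles],
                       weights: Sequence[float], rng: random.Random) -> float:
    """PRS of one simulated child: each parent transmits one of their two
    alleles at random, independently at every locus (a simplification)."""
    score = 0.0
    for m_alleles, f_alleles, weight in zip(mother, father, weights):
        dosage = rng.choice(m_alleles) + rng.choice(f_alleles)
        score += weight * dosage
    return score


def prs_range(mother: Sequence[Alleles], father: Sequence[Alleles],
              weights: Sequence[float], n_children: int = 1000,
              seed: int = 0) -> Tuple[float, float]:
    """Lowest and highest PRS observed among the simulated children."""
    rng = random.Random(seed)
    scores: List[float] = [simulate_child_prs(mother, father, weights, rng)
                           for _ in range(n_children)]
    return min(scores), max(scores)


# Hypothetical parents at three loci, with made-up weights.
mother = [(0, 1), (1, 1), (0, 0)]
father = [(0, 1), (0, 1), (1, 1)]
weights = [0.1, 0.2, 0.3]

low, high = prs_range(mother, father, weights)
print(f"simulated PRS range: {low:.2f} to {high:.2f}")
```

Note that the parental genotypes bound the range of possible scores, and every simulated score inherits whatever inaccuracy is present in the weights.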

It turns out there is value to whole-genome sequencing for polygenic risk score analysis, but it is when one is performing the genome-wide association studies on which the PRS are based. The reason is a bit subtle, and has to do with differences in genetics between populations. Specifically, as explained in (De La Vega and Bustamante, 2018), variants that associate with a disease in one population may be different than variants that associate with the disease in another population, and whole-genome sequencing across populations can help to mitigate biases that result when restricting to SNP panels. Unfortunately, as De La Vega and Bustamante note, whole-genome sequencing for GWAS “would increase costs by orders of magnitude”. In any case, the value of whole-genome sequencing for PRS lies mainly in identifying relevant variants, not in assessing risk in individuals.

The issue of population structure affecting PRS unfortunately transcends considerations about whole-genome sequencing. (Curtis 2018) shows that PRS for schizophrenia is more strongly associated with ancestry than with the disease. Specifically, he shows that “The PRS for schizophrenia varied significantly between ancestral groups and was much higher in African than European HapMap subjects. The mean difference between these groups was 10 times as high as the mean difference between European schizophrenia cases and controls. The distributions of scores for African and European subjects hardly overlapped.” The figure from Curtis’ paper showing the distribution of PRS for schizophrenia across populations is displayed below (the three letter codes at the bottom are abbreviations for different population groups; CEU stands for Northern Europeans from Utah and is the lowest).

The dependence of PRS on population is a problem that is compounded by a general problem with GWAS, namely that Europeans and individuals of European descent have been significantly oversampled in GWAS. Furthermore, even within a single ancestry group, the prediction accuracy of PRS can depend on confounding factors such as socio-economic status (Mostafavi et al. 2020). Practically speaking, the implications for PES are beyond troubling. The PRS in the reports provided to customers of Orchid Health may be inaccurate or meaningless due not only to the genetic background or admixture of the parents involved, but also to other unaccounted-for factors. Embryo selection on the basis of such data becomes worse than just throwing dice: it can potentially lead to unintended consequences in the genomes of the selected embryos. (Martin et al. 2019) show unequivocally that clinical use of polygenic risk scores may exacerbate health disparities.

People pathos

The fact that Silicon Valley entrepreneurs are jumping aboard a technically incoherent venture and are willing to set aside serious ethical and moral concerns is not very surprising. See, e.g. Theranos, which was supported by its investors despite concerns being raised about the technical foundations of the company. After a critical story appeared in the Wall Street Journal, the company put out a statement that

“[Bad stories]…come along when you threaten to change things, seeded by entrenched interests that will do anything to prevent change, but in the end nothing will deter us from making our tests the best and of the highest integrity for the people we serve, and continuing to fight for transformative change in health care.”

While this did bother a few investors at the time, many stayed the course for a while longer. Siddiqui uses similar language, brushing off criticism by complaining about paternalism in the health care industry and gatekeeping, while stating that

“We’re in an age of seismic change in biotech – the ability to sequence genomes, the ability to edit genomes, and now the unprecedented ability to impact the health of a future child.”

Her investors, many of whom got rich from cryptocurrency trading or bitcoin, cheer her on. One of her investors is Brian Armstrong, CEO of Coinbase, who believes “[Orchid is] a step towards where we need to go in medicine.” I think I can understand some of the ego and money incentives of Silicon Valley that drive such sentiment. But one thing that disappoints me is that scientists I personally held in high regard, such as Jan Liphardt (associate professor of Bioengineering at Stanford) who is on the scientific advisory board and Carlos Bustamante (co-author of the paper about population structure associated biases in PRS mentioned above) who is an investor in Orchid Health, have associated themselves with the company. It’s also very disturbing that Anne Wojcicki, the CEO of 23andMe, whose team of statistical geneticists understands the subtleties of PRS, still went ahead and invested in the company.

Conclusion

Orchid Health’s polygenic embryo selection, which it will be offering later this year, is unethical and morally repugnant. My suggestion is to think twice before sending them three years of tax returns to try to get a discount on their product.

Steven Miller is a math professor at Williams College who specializes in number theory and theoretical probability theory. A few days ago he published a “declaration” in which he performs an “analysis” of phone bank data of registered Republicans in Pennsylvania. The data was provided to him by Matt Braynyard, who led Trump’s data team during the 2016 campaign. Miller frames his “analysis” as an attempt to “estimate the number of fraudulent ballots in Pennsylvania”, and his analysis of the data leads him to conclude that

“almost surely…the number of ballots requested by someone other than the registered Republican is between 37,001 and 58,914, and almost surely the number of ballots requested by registered Republicans and returned but not counted is in the range from 38,910 to 56,483.”

A review of Miller’s “analysis” leads me to conclude that his estimates are fundamentally flawed and that the data as presented provide no evidence of voter fraud.

This conclusion is easy to arrive at. The declaration claims (without a reference) that there were 165,412 mail-in ballots requested by registered Republicans in PA, but that “had not arrived to be counted” as of November 16th, 2020. The data Miller analyzed was based on an attempt to call some of these registered Republicans by phone to assess what happened to their ballots. The number of phone calls made, according to the declaration, is 23,184 = 17,000 + 3,500 + 2,684. The number 17,000 consists of phone calls that did not provide information either because an answering machine picked up instead of a person, or a person picked up and summarily hung up. 3,500 numbers were characterized as “bad numbers / language barrier”, and 2,684 individuals answered the phone. Curiously, Miller writes that “Almost 20,000 people were called”, when in fact 23,184 > 20,000.

In any case, clearly many of the phone numbers dialed were simply wrong numbers, as evidenced by the number of “bad” calls: 3,500. It’s easy to imagine how this can happen: confusion because some individuals share a name, phone numbers have changed, people move, the phone call bank makes an error when dialing etc. Let $f$ be the fraction of phone numbers out of the 23,184 that were “bad”, i.e. incorrect. We can estimate $f$ by noting that we have some information about it: we know that the 3,500 “bad numbers” were bad (by definition). Additionally, it is reported in the declaration that 556 people literally said that they did not request a ballot, and there is no reason not to take them at their word. We don’t know what fraction of the 17,000 individuals who were called and did not pick up or hung up were wrong numbers, but we do know that the fraction out of the total must equal the fraction out of the 17,000 + those we know for sure were bad numbers, i.e.

$$f \cdot 23{,}184 = f \cdot 17{,}000 + 3{,}500 + 556.$$

Solving for $f$ we find that $f = 4{,}056 / 6{,}184 \approx 0.66$. I’m surprised the number is so low. One would expect that individuals who requested ballots, but then didn’t send them in, would be enriched for people who have recently moved or are in the process of moving, or have other issues making it difficult to reach them or impossible to reach them at all.

The fraction of bad calls derived translates to about 1,700 bad numbers out of the 2,684 people that were reached. This easily explains not only the 556 individuals who said they did not request a ballot, but also the 463 individuals who said that they mailed back their ballots. In the case of the latter there is no irregularity; the number of bad calls suggests that all those individuals were reached in error and their ballots were legitimately counted so they weren’t part of the 165,412. It also explains the 544 individuals who said they voted in person.
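The arithmetic can be checked in a few lines. This sketch takes the relation described above literally (the bad fraction of all calls equals the bad fraction of the 17,000 uninformative calls plus the 3,500 and 556 known bad numbers); the variable names are mine, and the counts are those quoted from the declaration.

```python
# Counts quoted from the declaration.
calls_no_info = 17_000      # answering machine or immediate hang-up
known_bad = 3_500           # "bad numbers / language barrier"
reached = 2_684             # individuals who answered the phone
said_no_request = 556       # said they never requested a ballot
said_mailed_back = 463      # said they mailed their ballot back
said_voted_in_person = 544  # said they voted in person

total_calls = calls_no_info + known_bad + reached

# Solve f * total_calls = f * calls_no_info + known_bad + said_no_request for f.
bad_fraction = (known_bad + said_no_request) / (total_calls - calls_no_info)

# Expected number of wrong numbers among the people who were actually reached.
expected_bad_reached = bad_fraction * reached

# Responses that the declaration treats as evidence of irregularities.
unexplained = said_no_request + said_mailed_back + said_voted_in_person

print(f"total calls: {total_calls}")
print(f"estimated bad fraction: {bad_fraction:.3f}")
print(f"expected bad numbers among those reached: {expected_bad_reached:.0f}")
print(f"responses to be explained: {unexplained}")
```

The expected number of wrong numbers among those reached exceeds the combined count of the three groups of responses, which is the sense in which bad phone numbers alone can account for them.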

That’s it. The data don’t point to any fraud or irregularity, just a poorly designed poll with poor response rates and lots of erroneous information due to bad phone numbers. There is nothing to explain. Miller, on the other hand, has some things to explain.

First, I note that his declaration begins with a signed page asserting various facts about Steven Miller and the analysis he performed. Notably absent from the page, or anywhere else in the document, is a disclosure of funding source for the work and of conflicts of interest. On his work webpage, Miller specifically states that one should always acknowledge funding support.

Second, if Miller really wanted to understand the reason why some ballots were requested for mail-in, but had not yet arrived to be counted, he would also obtain data from Democrats. That would provide a control on various aspects of the analysis, and help to establish whether irregularities, if they were to be detected, were of a partisan nature. Why did Miller not include an analysis of such data?

Third, one might wonder why Steven Miller chose to publish this “declaration”. Surely a professor who has taught probability and statistics for 15 years (as Miller claims he has) must understand that his own “analysis” is fundamentally flawed, right? Then again, I’ve previously found that excellent pure mathematicians are prone to falling into a data analysis trap, i.e. a situation where their lack of experience analyzing real-world datasets leads them to believe naïve analysis that is deeply flawed. To better understand whether this might be the case with Miller, I examined his publication record, which he has shared publicly via Google Scholar, to see whether he has worked with data. The first thing I noticed was that he has published more than 700 articles (!) and has an h-index of 47 for a total of 8,634 citations… an incredible record for any professor, and especially for a mathematician. A Google search for his name displays this impressive number of citations:

As it turns out, his impressive publication record is a mirage. When I took a closer look I found that many of the papers he lists on his Google Scholar page are not his, but rather articles published by other authors with the name S Miller. “His” most cited article was published in 1955, a year that transpired well before he was born. Miller’s own most cited paper is a short unpublished tutorial on least squares (I was curious and reviewed it as well only to find some inaccuracies but hey, I don’t work for this guy).

I will note that in creating his Google Scholar page, Miller did not just enter his name and email address (required). He went to the effort of customizing the page, including the addition of keywords and a link to his homepage, and in doing so followed his own general advice to curate one’s CV (strangely, he also dispenses advice on job interviews, including about shaving; I guess only women interview for jobs?). But I digress: the question is, why does his Google Scholar page display massively inflated publication statistics based on papers that are not his? I’ve seen this before, and in one case where I had hard evidence that it was done deliberately to mislead I reported it as fraud. Regardless of Miller’s motivations, by looking at his actual publications I confirmed what I suspected, namely that he has hardly any experience analyzing real world data. I’m willing to chalk up his embarrassing “declaration” to statistics illiteracy and naïveté.

In summary, Steven Miller’s declaration provides no evidence whatsoever of voter fraud in Pennsylvania.

Lior Pachter

Division of Biology and Biological Engineering &

Department of Computing and Mathematical Sciences

California Institute of Technology

Abstract

A recently published pilot study on the efficacy of 25-hydroxyvitamin D3 (calcifediol) in reducing ICU admission of hospitalized COVID-19 patients concluded that the treatment “seems able to reduce the severity of disease, but larger trials with groups properly matched will be required to show a definitive answer”. In a follow-up paper, Jungreis and Kellis re-examine this so-called “Córdoba study” and argue that the authors of the study have undersold their results. Based on a reanalysis of the data in a manner they describe as “rigorous” and using “well established statistical techniques”, they urge the medical community to “consider testing the vitamin D levels of all hospitalized COVID-19 patients, and taking remedial action for those who are deficient.” Their recommendation is based on two claims: in an examination of unevenness in the distribution of one of the comorbidities between cases and controls, they conclude that there is “no evidence of incorrect randomization”, and they present a “mathematical theorem” to make the case that the effect size in the Córdoba study is significant to the extent that “they can be confident that if assignment to the treatment group had no effect, we would not have observed these results simply due to chance.”

Unfortunately, the “mathematical analysis” of Jungreis and Kellis is deeply flawed, and their “theorem” is vacuous. Their analysis cannot be used to conclude that the Córdoba study shows that calcifediol significantly reduces ICU admission of hospitalized COVID-19 patients. Moreover, the Córdoba study is fundamentally flawed, and therefore there is nothing to learn from it.

The Córdoba study

The Córdoba study, described by the authors as a pilot, was ostensibly a randomized controlled trial, designed to determine the efficacy of 25-hydroxyvitamin D3 in reducing ICU admission of hospitalized COVID-19 patients. The study consisted of 76 patients hospitalized for COVID-19 symptoms, with 50 of the patients treated with calcifediol, and 26 not receiving treatment. Patients were administered “standard care”, which according to the authors consisted of “a combination of hydroxychloroquine, azithromycin, and for patients with pneumonia and NEWS score ≥ 5, a broad spectrum antibiotic”. Crucially, admission to the ICU was determined by a “Selection Committee” consisting of intensivists, pulmonologists, internists, and members of an ethics committee. The Selection Committee based ICU admission decisions on the evaluation of several criteria, including presence of comorbidities, and the level of dependence of patients according to their needs and clinical criteria.

The result of the Córdoba trial was that only 1/50 of the treated patients was admitted to the ICU, whereas 13/26 of the untreated patients were admitted (p-value = 7.7 × 10⁻⁷ by Fisher’s exact test). This is a minuscule p-value but it is meaningless. Since there is no record of the Selection Committee deliberations, it is impossible to know whether the ICU admission of the 13 untreated patients was due to their previous high blood pressure comorbidity. Perhaps the 11 treated patients with the comorbidity were not admitted to the ICU because they were older, and the Selection Committee considered their previous higher blood pressure to be more “normal” (14/50 treatment patients were over the age of 60, versus only 5/26 of the untreated patients).
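The quoted p-value is easy to check by hand. Below is a minimal sketch of Fisher’s exact test using only the Python standard library; the function names are mine, and the two-sided convention (sum the probabilities of all tables no more likely than the observed one) is an assumption about how the test is usually defined, not something taken from either paper.

```python
from math import comb


def hypergeom_pmf(k, n_treated, n_untreated, n_icu):
    """P(exactly k of the n_icu ICU admissions fall in the treated group)."""
    total = n_treated + n_untreated
    return comb(n_treated, k) * comb(n_untreated, n_icu - k) / comb(total, n_icu)


def fisher_exact(icu_treated, n_treated, icu_untreated, n_untreated):
    """Return (one_sided, two_sided) Fisher exact p-values for a 2x2 table.

    one_sided: probability of icu_treated or fewer admissions among the treated.
    two_sided: total probability of all tables no more likely than the observed one.
    """
    n_icu = icu_treated + icu_untreated
    k_min = max(0, n_icu - n_untreated)
    k_max = min(n_icu, n_treated)
    pmf = {k: hypergeom_pmf(k, n_treated, n_untreated, n_icu)
           for k in range(k_min, k_max + 1)}
    observed = pmf[icu_treated]
    one_sided = sum(p for k, p in pmf.items() if k <= icu_treated)
    # Small relative tolerance guards against floating point ties.
    two_sided = sum(p for p in pmf.values() if p <= observed * (1 + 1e-9))
    return one_sided, two_sided


# 1 of 50 treated and 13 of 26 untreated patients admitted to the ICU.
one_sided, two_sided = fisher_exact(1, 50, 13, 26)
print(f"one-sided p = {one_sided:.2e}")
print(f"two-sided p = {two_sided:.2e}")
```

Both values should come out at roughly 7.7 × 10⁻⁷: every table in the opposite tail is far more probable than the observed one, so the two-sided sum picks up nothing extra.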

Figure 1: Table 2 from [1] showing the comorbidities of patients. It is reproduced by virtue of [1] being published open access under the CC-BY license.

The fact that admission to the ICU could be decided in part based on the presence of comorbidities, and that there was a significant imbalance in one of the comorbidities, immediately renders the study results meaningless. There are several other problems with it that potentially confound the results: the study did not examine the Vitamin D levels of the treated patients, nor was the untreated group administered a placebo. Most importantly, the study numbers were tiny, with only 76 patients examined. Small studies are notoriously problematic, and are known to produce large effect sizes [9]. Furthermore, sloppiness in the study does not lead to confidence in the results. The authors state that the “rigorous protocol” for determining patient admission to the ICU is available as Supplementary Material, but there is no Supplementary Material distributed with the paper. There is also an embarrassing typo: Fisher’s exact test is referred to twice as “Fischer’s test”. To err once in describing this classical statistical test may be regarded as misfortune; to do it twice looks like carelessness.

A pointless statistics exercise

The Córdoba study has not received much attention, which is not surprising considering that by the authors’ own admission it was a pilot that at best only motivates a properly matched and powered randomized controlled trial. Indeed, the authors mention that such a trial (the COVIDIOL trial), with data being collected from 15 hospitals in Spain, is underway. Nevertheless, Jungreis and Kellis [3], apparently mesmerized by the 7.7 × 10⁻⁷ p-value for ICU admission upon treatment, felt the need to “rescue” the study with what amounts to faux statistical gravitas. They argue for immediate consideration of testing Vitamin D levels of hospitalized patients, so that “deficient” patients can be administered some form of Vitamin D “to the extent it can be done safely”. Their message has been noticed; only a few days after [3] appeared, the authors’ tweet to promote it had been retweeted more than 50 times [8].

Jungreis and Kellis claim that the p-value for the effect of calcifediol on patients is so significant, that in and of itself it merits belief that administration of calcifediol does, in fact, prevent admission of patients to ICUs. To make their case, Jungreis and Kellis begin by acknowledging that imbalance between the treated and untreated groups in the previous high blood pressure comorbidity may be a problem, but claim that there is “no evidence of incorrect randomization.” Their argument is as follows: they note that while the p-value for the imbalance in the previous high blood pressure comorbidity is 0.0023, it should be adjusted for the fact that there are 15 distinct comorbidities, and that just by chance, when computing so many p-values, one might be small. First, an examination of Table 2 in [1] (Figure 1) shows that there were only 14 comorbidities assessed, as none of the patients had previous chronic kidney disease. Thus, the number 15 is incorrect. Second, Jungreis and Kellis argue that a Bonferroni correction should be applied, and that this correction should be based on 30 tests (=15 × 2). The reason for the factor of 2 is that they claim that when testing for imbalance, one should test for imbalance in both directions. By applying the Bonferroni correction to the p-values, they derive a “corrected” p-value for previous high blood pressure being imbalanced between groups of 0.069. They are wrong on several counts in deriving this number. To illustrate the problems we work through the calculation step-by-step:

The question we want to answer is as follows: given that there are multiple comorbidities, is there a significant imbalance in at least one comorbidity? There are several ways to test for this, with the simplest being Šidák’s correction [10] given by

$$p_{\text{corrected}} = 1 - (1 - m)^n,$$

where m is the minimum p-value among the comorbidities, and n is the number of tests. Plugging in m = 0.0023 (the smallest p-value in Table 2 of [1]) and n = 14 (the number of comorbidities) one gets 0.032 (note that the Bonferroni correction used by Jungreis and Kellis is the first-order Taylor approximation to the Šidák correction when m is small). The Šidák correction is based on an assumption that the tests are independent. However, that is certainly not the case in the Córdoba study. For example, having at least one prognostic factor is one of the comorbidities tabulated. In other words, the p-value obtained is conservative. The calculation above uses n = 14, but Jungreis and Kellis reason that the number of tests is 30 = 15 × 2, to take into account an imbalance in either the treated or untreated direction. Here they are assuming two things: that two-sided tests for each comorbidity will produce double the p-value of a one-sided test, and that two-sided tests are the “correct” tests to perform. They are wrong on both counts. First, the two-sided Fisher exact test does not, in general, produce a p-value that is double that of the 1-sided test. The study result is a good example: 1/50 treated patients admitted to the ICU vs. 13/26 untreated patients produces a p-value of 7.7 × 10⁻⁷ for both the 1-sided and 2-sided tests. Jungreis and Kellis do not seem to know this can happen, nor understand why; they go to great lengths to explain the importance of conducting a 1-sided test for the study result. Second, there is a strong case to be made that a 1-sided test is the correct test to perform for the comorbidities. The concern is not whether there was an imbalance of any sort, but whether the imbalance would skew results by virtue of the study including too many untreated individuals with comorbidities. In any case, if one were to give Jungreis and Kellis the benefit of the doubt, and perform a two-sided test, the corrected p-value for the previous high blood pressure comorbidity is 0.06 and not 0.069.
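The numbers in this calculation can be reproduced in a few lines. This is a sketch under my own assumptions: the function names are made up for illustration, and I am reading the 0.06 figure as the Šidák correction with 28 tests (14 comorbidities × 2 directions), which the text does not state explicitly.

```python
def sidak(min_p, n_tests):
    """Sidak-corrected p-value for the smallest of n_tests independent p-values."""
    return 1.0 - (1.0 - min_p) ** n_tests


def bonferroni(min_p, n_tests):
    """Bonferroni-corrected p-value (first-order approximation to Sidak)."""
    return min(1.0, n_tests * min_p)


m = 0.0023  # smallest comorbidity p-value (previous high blood pressure)

# 14 = comorbidities actually assessed; 28 = 14 x 2 directions (assumed reading);
# 30 = the 15 x 2 tests used by Jungreis and Kellis.
for n in (14, 28, 30):
    print(f"n = {n:2d}  Sidak = {sidak(m, n):.3f}  Bonferroni = {bonferroni(m, n):.3f}")
```

With n = 14 the Šidák value rounds to 0.032, and with n = 30 the Bonferroni value is the 0.069 reported by Jungreis and Kellis. The two corrections stay close because m is small.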

The most serious mistake that Jungreis and Kellis make, however, is in claiming that one can accept the null hypothesis of a hypothesis test when the p-value is greater than 0.05. The p-value they obtain is 0.069 which, even if it is taken at face value, is not grounds for claiming, as Jungreis and Kellis do, that “this is not significant evidence that the assignment was not random” and reason to conclude that there is “no evidence of incorrect randomization”. That is not how p-values work. A p-value less than 0.05 allows one to reject the null hypothesis (assuming 0.05 is the threshold chosen), but a p-value above the chosen threshold is not grounds for accepting the null. Moreover, the corrected p-value is 0.032 which is certainly grounds for rejecting the null hypothesis that the randomization was random.

Correction of the incorrect Jungreis and Kellis statistics may be a productive exercise in introductory undergraduate statistics for some, but it is pointless insofar as assessing the Córdoba study. While the extreme imbalance in the previous high blood pressure comorbidity is problematic because patients with the comorbidity may be more likely to get sick and require ICU admission, the study was so flawed that the exact p-value for the imbalance is a moot point. Given that the presence of comorbidities, not just their effect on patients, was a factor in determining which patients were admitted to the ICU, the extreme imbalance in the previous high blood pressure comorbidity renders the result of the study meaningless ex facie.

A definition is not a theorem is not proof of efficacy

In an effort to fend off criticism that the comorbidities of patients were improperly balanced in the study, Jungreis and Kellis go further and present a “theorem” they claim shows that there was a minuscule chance that an uneven distribution of comorbidities could render the study results not significant. The “theorem” is stated twice in their paper, and I’ve copied both theorem statements verbatim from their paper:

Theorem 1 In a randomized study, let p be the p-value of the study results, and let q be the probability that the randomization assigns patients to the control group in such a way that the values of $P_{\text{prognostic}}(\text{Patient})$ are sufficiently unevenly distributed between the treatment and control groups that the result of the study would no longer be statistically significant at the 95% level after controlling for the prognostic risk factors. Then $q < \frac{p}{0.05}$.

According to Jungreis and Kellis, $P_{\text{prognostic}}(\text{Patient})$ is the following: “There can be any number of prognostic risk factors, but if we knew what all of them were, and their effect sizes, and the interactions among them, we could combine their effects into a single number for each patient, which is the probability, based on all known and yet-to-be discovered risk factors at the time of hospital admission, that the patient will require ICU care if not given the calcifediol treatment. Call this (unknown) probability $P_{\text{prognostic}}(\text{Patient})$.”

The theorem is restated in the Methods section of the Jungreis and Kellis paper as follows:

Theorem 2 In a randomized controlled study, let p be the p-value of the study outcome, and let q be the probability that the randomization distributes all prognostic risk factors combined sufficiently unevenly between the treatment and control groups that when controlling for these prognostic risk factors the outcome would no longer be statistically significant at the 95% level. Then $q < \frac{p}{0.05}$.

While it is difficult to decipher the language the “theorem” is written in, let alone its meaning (note Theorem 1 and Theorem 2 are supposedly the same theorem), I was able to glean something about its content from reading the “proof”. The mathematical content of whatever the theorem is supposed to mean, is the definition of conditional probability, namely that if A and B are events with $P(B) > 0$, then

$$P(A|B) = \frac{P(A \cap B)}{P(B)}.$$

To be fair to Jungreis and Kellis, the “theorem” includes the observation that

$$P(A \cap B) \leq P(A).$$

This is not, by any stretch of the imagination, a “theorem”; it is literally the definition of conditional probability followed by an elementary inequality. The most generous interpretation of what Jungreis and Kellis were trying to do with this “theorem”, is that they were showing that the p-value for the study is so small, that it is small even after being multiplied by 20. There are less generous interpretations.
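For what it is worth, the arithmetic behind the generous interpretation is a one-liner. Taking the study p-value quoted earlier, and assuming the bound has the form q < p/0.05 (my reading of the statement), multiplying by 20 gives

$$q < \frac{p}{0.05} = 20p = 20 \times 7.7 \times 10^{-7} \approx 1.5 \times 10^{-5}.$$

The bound is small only because the raw p-value was small to begin with. It adds no information about whether the comorbidities were actually balanced.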

Does Vitamin D intake reduce ICU admission?

There has been a lot of interest in Vitamin D and its effects on human health over the past decade [2], and much speculation about its relevance for COVID-19 susceptibility and disease severity. One interesting result on disease susceptibility was published recently: in a study of 489 patients, it was found that the relative risk of testing positive for COVID-19 was 1.77 times greater for patients with likely deficient vitamin D status compared with patients with likely sufficient vitamin D status [7]. However, definitive results on Vitamin D and its relationship to COVID-19 will have to await larger trials. One such trial, a large randomized clinical trial with 2,700 individuals sponsored by Brigham and Women’s Hospital, is currently underway [4]. While this study might shed some light on Vitamin D and COVID-19, it is prudent to keep in mind that the outcome is not certain. Vitamin D levels are confounded with many socioeconomic factors, making the identification of causal links difficult. In the meantime, it has been suggested that it makes sense for individuals to maintain reference nutrient intakes of Vitamin D [6]. Such a public health recommendation is not controversial.

As for Vitamin D administration to hospitalized COVID-19 patients reducing ICU admission, the best one can say about the Córdoba study is that nothing can be learned from it. Unfortunately, the poor study design, small sample size, availability of only summary statistics for the comorbidities, and imbalanced comorbidities among treated and untreated patients render the data useless. While it may be true that calcifediol administration to hospital patients reduces subsequent ICU admission, it may also not be true. Thus, the follow-up by Jungreis and Kellis is pointless at best. At worst, it is irresponsible propaganda, advocating for potentially dangerous treatment on the basis of shoddy arguments masked as “rigorous and well established statistical techniques”. It is surprising to see Jungreis and Kellis argue that it may be unethical to conduct a placebo randomized controlled trial, which is one of the most powerful tools in the development of safe and effective medical treatments. They write “the ethics of giving a placebo rather than treatment to a vitamin D deficient patient with this potentially fatal disease would need to be evaluated.” The evidence for such a policy is currently non-existent. On the other hand, there are plenty of known risks associated with excess Vitamin D [5].

References

1. Marta Entrenas Castillo, Luis Manuel Entrenas Costa, José Manuel Vaquero Barrios, Juan Francisco Alcalá Díaz, José López Miranda, Roger Bouillon, and José Manuel Quesada Gomez. Effect of calcifediol treatment and best available therapy versus best available therapy on intensive care unit admission and mortality among patients hospitalized for COVID-19: A pilot randomized clinical study. The Journal of steroid biochemistry and molecular biology, 203:105751, 2020.

2. Michael F Holick. Vitamin D deficiency. New England Journal of Medicine, 357(3):266–281, 2007.

3. Irwin Jungreis and Manolis Kellis. Mathematical analysis of Córdoba calcifediol trial suggests strong role for Vitamin D in reducing ICU admissions of hospitalized COVID-19 patients. medRxiv, 2020.

4. JoAnn E Manson. https://clinicaltrials.gov/ct2/show/nct04536298.

5. Ewa Marcinowska-Suchowierska, Małgorzata Kupisz-Urbańska, Jacek Łukaszkiewicz, Paweł Płudowski, and Glenville Jones. Vitamin D toxicity–a clinical perspective. Frontiers in endocrinology, 9:550, 2018.

6. Adrian R Martineau and Nita G Forouhi. Vitamin D for COVID-19: a case to answer? The Lancet Diabetes & Endocrinology, 8(9):735–736, 2020.

7. David O Meltzer, Thomas J Best, Hui Zhang, Tamara Vokes, Vineet Arora, and Julian Solway. Association of vitamin D status and other clinical characteristics with COVID-19 test results. JAMA network open, 3(9):e2019722–e2019722, 2020.

8. Vivien Shotwell. https://tweetstamp.org/1327281999137091586.

9. Robert Slavin and Dewi Smith. The relationship between sample sizes and effect sizes in systematic reviews in education. Educational evaluation and policy analysis, 31(4):500–506, 2009.

10. Lynn Yi, Harold Pimentel, Nicolas L Bray, and Lior Pachter. Gene-level differential analysis at transcript-level resolution. Genome biology, 19(1):53, 2018.

“It is not easy when people start listening to all the nonsense you talk. Suddenly, there are many more opportunities and enticements than one can ever manage.”

– Michael Levitt, Nobel Prize in Chemistry, 2013

In 1990 Glendon McGregor, a restaurant waiter in Pretoria, South Africa, set up an elaborate hoax in which he posed as the crown prince of Liechtenstein to organize for himself a state visit to his own country. Amazingly, the ruse lasted for two weeks, and during that time McGregor was wined and dined by numerous South African dignitaries. He had a blast in his home town, living it up in a posh hotel, and enjoying a trip to see the Blue Bulls in Loftus Versfeld stadium. The story is the subject of the 1993 Afrikaans film “Die Prins van Pretoria” (The Prince of Pretoria). Now, another Pretorian is at it, except this time not for two weeks but for several months. And, unlike McGregor’s hoax, this one does not just embarrass a government and leave it with a handful of hotel and restaurant bills. This hoax risks lives.

Michael Levitt, a Stanford University Professor of structural biology and winner of the Nobel Prize in Chemistry in 2013, wants you to believe the COVID-19 pandemic is over in the US. He claimed it ended on August 22nd, with a total of 170,000 deaths (there are now over 200,000 with hundreds of deaths per day). He claims those 170,000 deaths weren’t even COVID-19 deaths, and since the virus is not very dangerous, he suggests you infect yourself. How? He proposes you set sail on a COVID-19 cruise.

Levitt’s lunacy began with an attempt to save the world from epidemiologists. Levitt presumably figured this would not be a difficult undertaking, because, he has noted, “epidemiologists see their job not as getting things correct”. I guess he figured that he could do better than that. On February 25th of this year, at a time when there had already been 2,663 deaths due to the SARS-CoV-2 virus in China but before the World Health Organization had declared the COVID-19 outbreak a pandemic, he delivered what sounded like good news. He predicted that the virus had almost run its course, and that the final death toll in China would be 3,250. This turned out to be a somewhat optimistic prediction. As of the writing of this post (September 21, 2020), there have been 4,634 reported COVID-19 deaths in China, and there is reason to believe that the actual number of deaths has been far higher (see, e.g. He et al., 2020, Tsang et al., 2020, Wadham and Jacobs, 2020).

Instead of publishing his methods or waiting to evaluate the veracity of his claims, Levitt signed up for multiple media interviews. Emboldened by “interest in his work” (who doesn’t want to interview a Nobel laureate?), he started making more predictions of the form “COVID-19 is not a threat and the pandemic is over”. On March 20th he said that “he will be surprised if the number of deaths in Israel surpasses 10”. Unfortunately, there have been 1,256 COVID-19 deaths in Israel so far, with a massive increase in cases over the past few weeks and no end to the pandemic in sight. On March 28th, when Switzerland had 197 deaths, he predicted the pandemic was almost over and would end with 250. Switzerland has now seen 1,762 deaths, and a recent dramatic increase in cases has overwhelmed hospitals in some regions, leading to new lockdown measures. Levitt’s predictions have come loose and fast. On June 28th he predicted deaths in Brazil would plateau at 98,000. There have been over 137,000 deaths in Brazil with hundreds of people dying every day now. In Italy he predicted on March 28th that the pandemic was past its midpoint and deaths would end at 17,000 – 20,000. There have now been 35,707 deaths in Italy. The way he described the situation in the country at the time, when crematoria were overwhelmed, was “normal”.

I became aware of Levitt’s predictions via an email list of the Fellows of the International Society for Computational Biology on March 14th. I’ve been a Fellow for 3 years, and during this time I’ve received hardly any mail, except during Fellow nomination season. It was therefore somewhat of a surprise to start receiving emails from Michael Levitt regarding COVID-19, but it was a time when scientists were scrambling to figure out how they could help with the pandemic and I was excited at the prospect of all of us learning from each other and possibly helping out. Levitt began by sending around a PDF via a Dropbox link and asked for feedback. I wrote back right away suggesting he distribute the code used to make the figures and make clear the exact versions of data he was scraping to get the results (with dates and copies so the work could be replicated). I also suggested he add references, and noted there were several typos (e.g. the formula clearly had wrong indices). I asked that he post it on the bioRxiv so it could receive community feedback, and suggested he fill in some details so I and others could better evaluate the methods (e.g. I pointed out that I thought the use of a Gaussian for

was problematic).

The initial correspondence rapidly turned into a flurry of email on the ISCB Fellows list. Levitt was full of advice. He suggested everyone wear a mask and I and others pushed back noting, as Dr. Anthony Fauci did at the time, that there was a severe shortage of masks and they should go to doctors first. Several exchanges centered on who to blame for the pandemic (one Fellow suggested immigrants in Italy). Among all of this, there was one constant: Levitt’s COVID-19 advice and predictions kept on coming, and without reflection or response to the well-meaning critiques. After Levitt said he’d be surprised if there were more than 10 deaths in Israel, and after he refused to send code reproducing his analyses, or post a preprint, I urged my fellow Fellows in ISCB to release a statement distancing our organization from his opinions, and emphasizing the need for rigorous, reproducible work. I was admonished by two colleagues and told, in so many words, to shut up.

Meanwhile, Levitt did not shut up. In March, after talking to Israeli newspapers about how he would be surprised if there were more than 10 deaths, he spoke directly to Israeli Prime Minister Benjamin Netanyahu to deliver his message that Israel was overreacting to the virus (he tried to speak to US president Donald Trump as well). Israel is now in a very dangerous situation with COVID-19 out of control. It has the highest number of cases per capita in the world. Did Levitt play a role in this by helping to convince Netanyahu to ease restrictions in the country in May? We may never know. There were likely many factors contributing to Israel’s current tragedy but Levitt, by virtue of speaking directly to Netanyahu, should be scrutinized for his actions. What we do know is that at the time, he was making predictions about the nature and expected course of the virus with unpublished methods (i.e. not even preprinted), poorly documented data, and without any possibility for anyone to reproduce any of his work. His disgraceful scholarship has not improved in the subsequent months. He did eventually post a preprint, but the data tab states “all data to be made available” and there is the following paragraph relating to availability of code:

We would like to make the computer codes we use available to all but these are currently written in a variety of languages that few would want to use. While Dr. Scaiewicz uses clean self-documenting Jupyter Python notebook code, Dr. Levitt still develops in a FORTRAN dialect call Mortran (Mortran 1975) that he has used since 1980. The Mortran preprocessor produces Fortran that is then converted to C-code using f2c. This code is at least a hundred-fold faster than Python code. His other favorite language is more modern, but involves the use of the now deprecated language Perl and Unix shell scripts.

Nevertheless, the methods proposed here are simple; they are easily and quickly implemented by a skilled programmer. Should there be interest, we would be happy to help others develop the code and test them against ours. We also realize that there is ample room for code optimization. Some of the things that we have considered are pre-calculating sums of terms to convert computation of the correlation coefficient from a sum over N terms to the difference of two sums. Another way to speed the code would be to use hierarchical step sizes in a binary search to find the value of lnN that gives the best straight line.

Our study involving as it did a small group working in different time zones and under extreme time pressure revealed that scientific computation nowadays faces a Babel of computer languages. In some ways this is good as we generally re-coded things rather than struggle with the favorite language of others. Still, we worry about the future of science when so many different tools are used. In this work we used Python for data wrangling and some plotting, Perl and Unix shell tools for data manipulation, Mortran (effectively C++) for the main calculations, xmgrace and gnuplot for other plotting, Excel (and Openoffice) for playing with data. And this diversity is for a group of three!

tl;dr, there is no code. I’ve asked Michael Levitt repeatedly for the code to reproduce the figures in his paper and have not received it. I can’t reproduce his plots.

Levitt now lies when confronted about his misguided and wrong prediction about COVID-19 in Israel. He claims it is a “red herring”, and that he was talking about “excess deaths”. I guess he figures he can hide behind Hebrew. There is a recording where anyone can hear him being asked directly if he is saying he will be surprised with more than 10 COVID-19 deaths in Israel, and his answer is very clear: “I will be very surprised”. It is profoundly demoralizing to discover that a person you respected is a liar, a demagogue or worse. Sadly, this has happened to me before.

Levitt continues to put people’s lives at risk by spewing lethal nonsense. He is suggesting that we should let COVID-19 spread in the population so it will mutate to be less harmful. This is nonsense. He is promoting anti-vax conspiracy theories that are nonsense. He is promoting nonsense conspiracy theories about scientists. And yet, he continues to have a prominent voice. It’s not hard to see why. The article, similar to all the others where he is interviewed, begins with “Nobel Prize winner…”

In the Talmud, in Mishnah Sanhedrin 4:9, it is written “Whoever destroys a soul, it is considered as if he destroyed an entire world”. I thought of this when listening to an interview with Michael Levitt that took place in May, where he said:

I am a real baby-boomer, I was born in 1947, and I think we’ve really screwed up. We cause pollution, we allowed the world’s population to increase three-fold, we’ve caused the problems of global warming, we’ve left your generation with a real mess in order to save a really small number of very old people. If I was a young person now, I would say, “now you guys are gonna pay for this.”

Rapid testing has been a powerful tool to control COVID-19 outbreaks around the world (see Iceland, Germany, …). While many countries support testing through government sponsored healthcare infrastructure, in the United States COVID-19 testing has largely been organized and provided by for-profit businesses. While financial incentives coupled with social commitment have motivated many scientists and engineers at companies and universities to work hard around the clock to facilitate testing, there are also many individuals who have succumbed to greed. Opportunism has bubbled to the surface and scams, swindles, rackets, misdirection and fraud abound. This is happening at a time when workplaces are in desperate need of testing, and demands for testing are likely to increase as schools, colleges and universities start opening up in the next month. Here are some examples of what is going on:

- First and foremost there is your basic fraud. In July, a company called “Fillakit”, which had been awarded a $10.5 million federal contract to make COVID-19 test kits, was shipping unusable, contaminated, soda bottles. This “business”, started by some law and real estate guy called Paul Wexler, who has been repeatedly accused of fraud, went under two months after it launched amidst a slew of investigations and complaints from former workers. Oh, BTW, Michigan ordered 322,000 Fillakit tubes which went straight to the trash (as a result they could not do a week’s worth of tests).

- Not all fraud is large scale. Some former VP at now defunct “Cure Cannabis Solutions” ordered 100 COVID-19 test kits that do who-knows-what at a price of 50c a kit. The Feds seized it. These kits, which were not FDA approved, were sourced from “Anhui DeepBlue Medical” in Hefei, China.

- To be fair, the Cannabis guy was small fry. In Laredo, Texas, some guy called Robert Castañeda received assistance from a congressman to purchase $500,000 of kits from the same place! Anhui DeepBlue Medical sent Castañeda 20,000 kits ($25 a test). Apparently the tests had 20% accuracy. To his credit, the Cannabis guy paid 1/50th the price for this junk.

- Let’s not forget who is really raking in the bucks though. Quest Diagnostics and LabCorp are the primary testing outfits in the US right now; each is processing around 150,000 tests a day. These are for-profit companies and indeed they are making a profit. The economics is simple: insurance companies reimburse LabCorp and Quest Diagnostics for the tests. The rates are basically determined by the amount that Medicare will pay, i.e. the government price point. Initially, the reimbursement was set at $51, and well… at that price LabCorp and Quest Diagnostics just weren’t that interested. I mean, people have to put food on the table, right? (Adam Schechter, CEO of LabCorp makes $4.9 million a year; Steve Rusckowski, CEO of Quest Diagnostics, makes $9.9 million a year). So the Medicare reimbursement rate was raised to $100. The thing is, LabCorp and Quest Diagnostics get paid regardless of how long it takes to return test results. Some people are currently waiting 15 days to get results (narrator: such test results are useless).

- Perhaps a silver lining lies in the stock price of these companies. The title of this post is “$ How to Profit From COVID-19 Testing $”. I guess being able to take a week or two to return a test result and still get paid $100 for something that cost $30 lifts the stock price… and you can profit!

- Let’s not forget the tech bros! A bunch of dudes in Utah from companies like Nomi, Domo and Qualtrics signed a two-month contract with the state of Utah to provide 3,000 tests a day. One of the tech executives pushing the initiative, called TestUtah, was a 37-year-old founder (of Nomi Health) by the name of Mark Newman. He admitted that “none of us knew anything about lab testing at the start of the effort”. Didn’t stop Newman et al. from signing more than $50 million in agreements with several states to provide testing. tl;dr: the tests had a poor limit of detection, samples were mishandled, throughput was much lower than promised etc. etc. and as a result they weren’t finding positive cases at rates similar to other testing facilities. The significance is summarized poignantly in a New Yorker piece about the debacle:

“I might be sick, but I want to go see my grandma, who’s ninety-five. So I go to a TestUtah site, and I get tested. TestUtah tells me I’m negative. I go see grandma, and she gets sick from me because my result was wrong, because TestUtah ran an unvalidated test.”

P.S. There has been a disturbing TestUtah hydroxychloroquine story going on behind the scenes. I include this fact because no post on fraud and COVID-19 would be complete without a mention of hydroxychloroquine.

- Maybe though, the tech bros will save the day. The recently launched $5 million COVID-19 X-prize is supposed to incentivize the development of “Frequent. Fast. Cheap. Easy.” COVID-19 testing. The goal is nothing less than to “radically change the world.” I’m hopeful, but I just hope they don’t cancel it like they did the genome prize. After all, their goal of “500 tests per week with 12 hour turnaround from sample to result” is likely to be outpaced by innovation just like what happened with genome sequencing. So in terms of making money from COVID-19 testing don’t hold your breath with this prize.

- As is evident from the examples above, one of the obstacles to quick riches with COVID-19 testing in the USA is the FDA. The thing about COVID-19 testing is that lying to the FDA on applications, or providing unauthorized tests, can lead to unpleasantries, e.g. jail. So some play it straight and narrow. Consider, for example, SeqOnce, which has developed the Azureseq SARS-CoV-2 RT-qPCR kit. These guys have an “EUA-FDA validated test”:

This is exactly what you want! You can click on “Order Now” and pay $3,000 for a kit that can be used to test several hundred samples (great price!) and the site has all the necessary information: IFUs (these are “instructions for use” that come with FDA authorized tests), validation results etc. If you look carefully you’ll see that administration of the test requires FDA approval. The company is upfront about this. Except the test is not FDA authorized; this is easy to confirm by examining the FDA Coronavirus EUA site. One can infer from a press release that they have submitted an EUA (Emergency Use Authorization) but while they claim it has been validated, nowhere does it say it has been authorized.

Clever eh? Authorized, validated, authorized, validated, authorized… and here I was just about to spend $3,000 for a bunch of tests that cannot be currently legally administered. Whew!

At least this is not fraud. Maybe it’s better called… I don’t know… a game? Other companies are playing similar games. Ginkgo Bioworks is advertising “testing at scale, supporting schools and businesses” with an “Easy to use FDA-authorized test” but again this seems to be a product that has “launched”, not one that, you know, actually exists; I could find no Ginkgo Bioworks test that works at scale that is authorized on the FDA Coronavirus EUA website, and it turns out that what they mean by FDA authorized is an RT-PCR test that they have outsourced to others. Fingers crossed though- maybe the marketing helped CEO Jason Kelly raise the $70 million his company has received for the effort; I certainly hope it works (soon)!

- By the way, I mentioned that the SeqOnce operation is a bunch of “guys”. I meant this literally; this is their “team”:

Just one sec… what is up with biotech startups and 100% male leadership teams? (See Epinomics, Insight Genetics, Ocean Genomics, Kailos Genetics, Circulogene, etc.) And what’s up with the black and white thing? Is that to try to hide that there are no people of color?

I mention the 100% male team because if you look at all the people mentioned in this post, all of them are guys (except the person in the next sentence), and I didn’t plan that, it’s just how it worked out. Look, I’m not casting shade on the former CEO of Theranos. I’m just saying that there is a strong correlation here. Sorry, back to the regular programming…

- Speaking of swindlers and scammers, this post would not be complete without a mention of the COVID-19 testing czar, Jared Kushner. His secret testing plan for the United States went “poof into thin air”! I felt that the 1 million contaminated and unusable Chinese test kits that he ordered for $52 million deserved the final mention in this post. Sadly, these failed kits appear to be the main thrust of the federal response to COVID-19 testing needs so far, and consistent with Trump’s recent call to “slow the testing down” (he wasn’t kidding). Let’s see what turns up today at the hearings of the U.S. House Select Subcommittee on Coronavirus, whose agenda is “The Urgent Need for a National Plan to Contain the Coronavirus”.

Five years ago on this day, Nicolas Bray and I wrote a blog post on The network nonsense of Manolis Kellis in which we described the paper Feizi et al. 2013 from the Kellis lab as dishonest and fraudulent. Specifically, we explained that:

“Feizi et al. have written a paper that appears to be about inference of edges in networks based on a theoretically justifiable model but

- the method used to obtain the results in the paper is completely different than the idealized version sold in the main text of the paper and

- the method actually used has parameters that need to be set, yet no approach to setting them is provided. Even worse,

- the authors appear to have deliberately tried to hide the existence of the parameters. It looks like

- the reason for covering up the existence of parameters is that the parameters were tuned to obtain the results. Moreover,

- the results are not reproducible. The provided data and software is not enough to replicate even a single figure in the paper. This is disturbing because

- the performance of the method on the simplest of all examples, a correlation matrix arising from a Gaussian graphical model, is poor.”

A second point we made is that the justification for the method, which the authors called “network deconvolution”, was nonsense. For example, the authors wrote that the model assumes that networks are “linear time-invariant flow-preserving operators.” Perhaps I take things too literally when I read papers but I have to admit that five years later I still don’t understand the sentence. However, just because a method is ad-hoc, heuristic, or perhaps poorly explained, doesn’t mean it won’t work well in practice. In the blog post we compared network deconvolution to regularized partial correlation on simulated data, and found network deconvolution performed poorly. But in a responding comment, Kellis noted that “in our experience, partial correlation performed very poorly in practice.” He added that “We have heard very positive feedback from many other scientists using our software successfully in diverse applications.”
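For readers who haven’t met partial correlation before, here is a minimal sketch of the idea on a toy Gaussian graphical model. To be clear about the assumptions: the four-node chain, the edge weights and the function name `partial_correlation` are all made up for illustration, the calculation uses the exact population correlation matrix rather than simulated samples, and there is no regularization, so this is not the benchmark from our original post. It only shows why partial correlation is the natural baseline: in a Gaussian graphical model the indirect correlations vanish once you invert the matrix.

```python
import numpy as np

def partial_correlation(cov):
    """Partial correlations computed from the inverse (precision) of a covariance or correlation matrix."""
    precision = np.linalg.inv(cov)
    d = np.sqrt(np.diag(precision))
    pcor = -precision / np.outer(d, d)
    np.fill_diagonal(pcor, 1.0)
    return pcor

# Hypothetical toy model: a chain 0 - 1 - 2 - 3, encoded as a tridiagonal precision matrix.
precision_true = np.array([
    [ 2.0, -0.8,  0.0,  0.0],
    [-0.8,  2.0, -0.8,  0.0],
    [ 0.0, -0.8,  2.0, -0.8],
    [ 0.0,  0.0, -0.8,  2.0],
])
cov_true = np.linalg.inv(precision_true)

# The correlation matrix is what one would observe: it is dense, with indirect "edges".
s = np.sqrt(np.diag(cov_true))
corr = cov_true / np.outer(s, s)

# Partial correlation recovers the chain: only neighbouring nodes remain connected.
pcor = partial_correlation(corr)

print("correlation between nodes 0 and 2:        ", round(corr[0, 2], 3))
print("partial correlation between nodes 0 and 2:", round(abs(pcor[0, 2]), 3))
print("partial correlation between nodes 0 and 1:", round(pcor[0, 1], 3))
```

With real data the correlation matrix is estimated from a finite sample, which is where regularization comes in; the sketch sidesteps that entirely.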

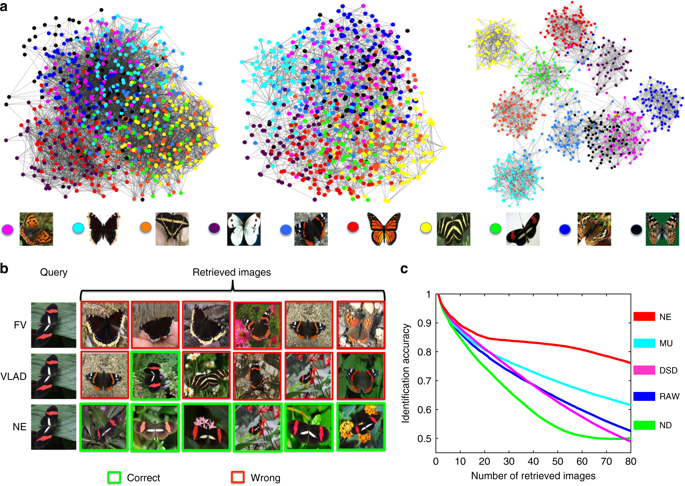

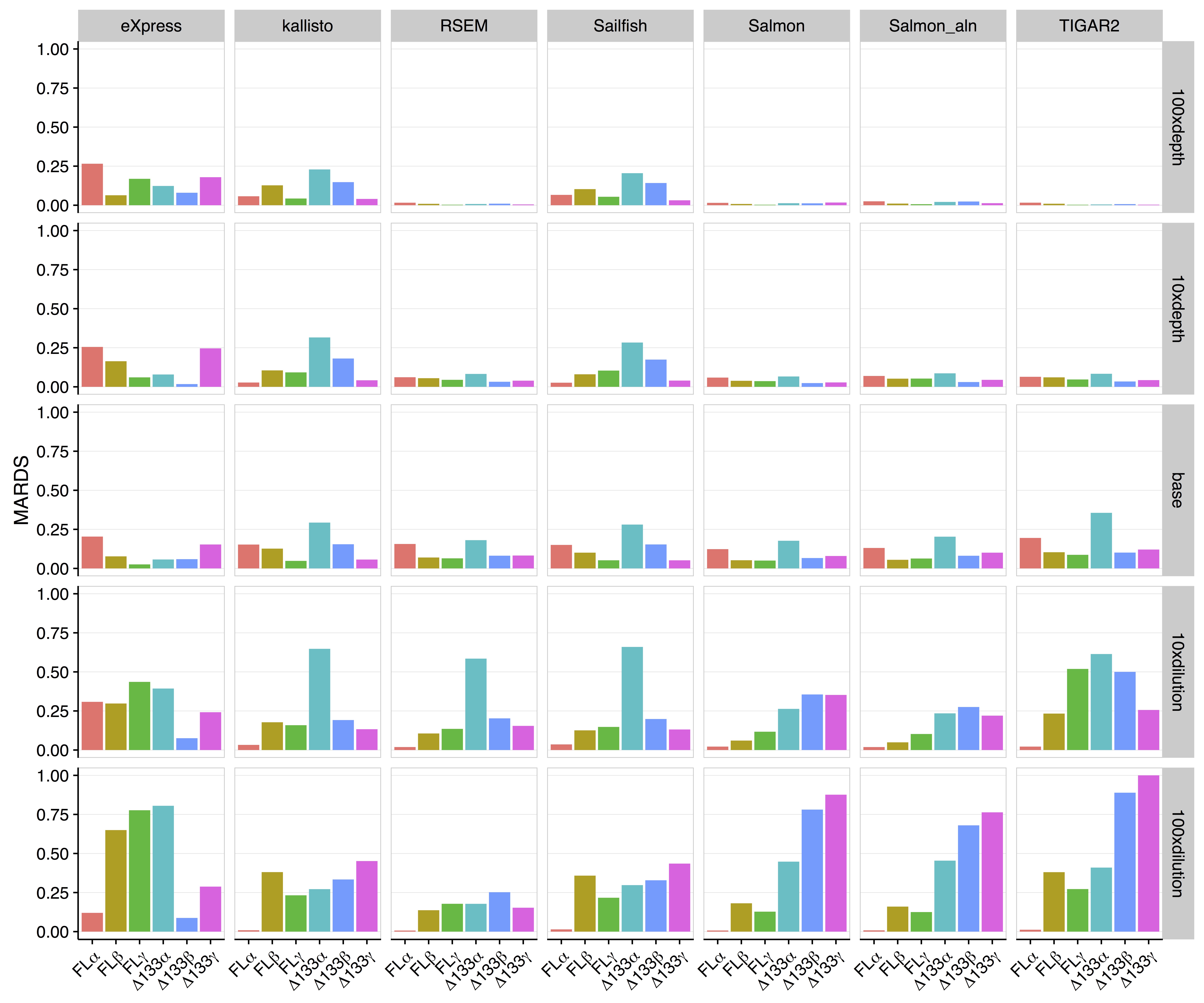

Fortunately we can now evaluate Kellis’ claims in light of an independent analysis in Wang, Pourshafeie, Zitnik et al. 2018, a paper from the groups of Serafim Batzoglou and Jure Leskovec (in collaboration with Carlos Bustamante) at Stanford University. There are three main results presented in Wang, Pourshafeie, Zitnik et al. 2018 that summarize the benchmarking of network deconvolution and other methods, and I reproduce figures showing the results below. The first shows the performance of network deconvolution and some other network denoising methods on a problem of butterfly species identification (network deconvolution is abbreviated ND and is shown in green). RAW (in blue) is the original unprocessed network. Network deconvolution is much worse than RAW:

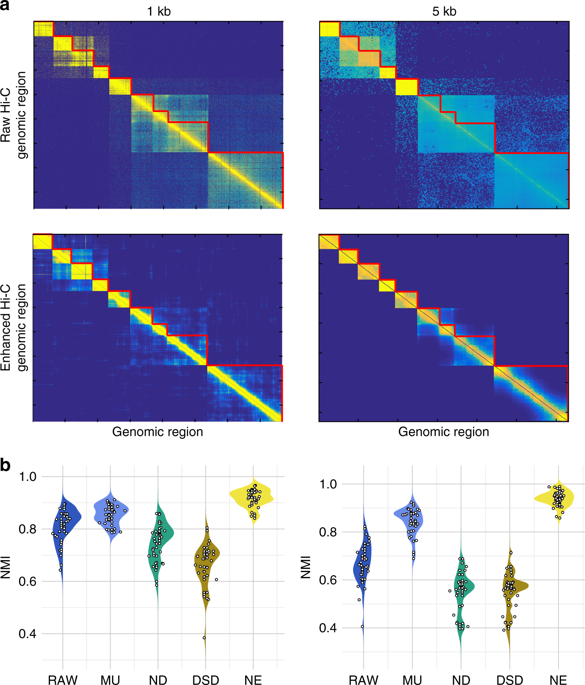

The second illustrates the performance of network denoising methods on Hi-C data. The performance metric in this case is normalized mutual information (NMI) which Wang, Pourshafeie, Zitnik et al. described as “a fair representation of overall performance”. Network deconvolution (ND, dark green) is again worse than RAW (dark blue):

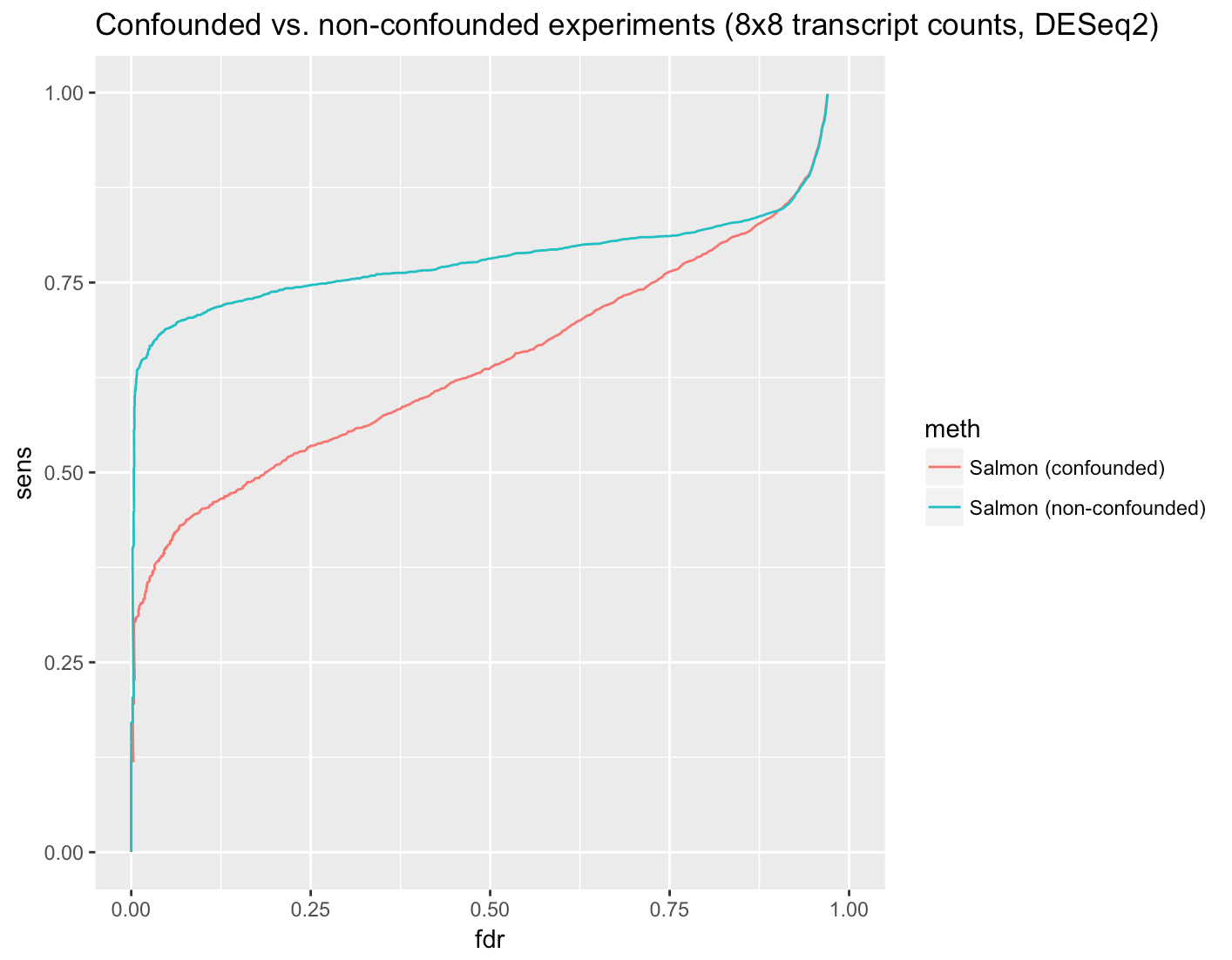

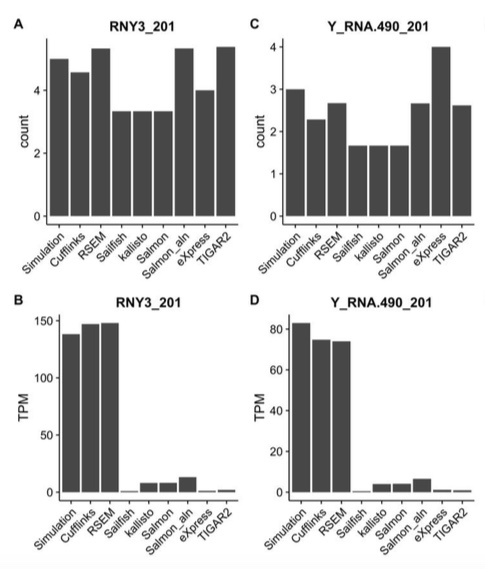

Finally, in an analysis of gene function from tissue-specific gene interaction networks, ND (blue) does perform better than RAW (pink) although barely. In four cases out of eight shown it is the worst of the four methods benchmarked:

Network deconvolution was claimed to be applicable to any network when it was published. At the time, Feizi stated that “We applied it to gene networks, protein folding, and co-authorship social networks, but our method is general and applicable to many other network science problems.” A promising claim, but in reality it is difficult to beat the nonsense law: Nonsense methods tend to produce nonsense results.

The Feizi et al. 2013 paper now has 178 citations, most of them drive-by citations. Interestingly this number, 178, is exactly the number of citations of the Barzel et al. 2013 network nonsense paper, which was published in the same issue of Nature Biotechnology. Presumably this reflects the fact that authors citing one paper feel obliged to cite the other. This pair of papers was thus an impact factor win for the journal. For each of the first authors, the network deconvolution or network silencing paper is his most highly cited first-author paper. Barzel is an assistant professor at Bar-Ilan University where he links to an article about his network nonsense on his “media page”. Feizi is an assistant professor at the University of Maryland where he lists Feizi et al. 2013 among his “selected publications”. Kellis teaches “network deconvolution” and its associated nonsense in his computational biology course at MIT. And why not? These days truth seems to matter less and less in every domain. A statement doesn’t have to be true, it just has to work well on YouTube, Twitter, Facebook, or some webpage, and as long as some people believe it long enough, say until the next grant cycle, promotion evaluation, or election, then what harm is done? A win-win for everyone. Except science.

Six years ago I received an email from a colleague in the mathematics department at UC Berkeley asking me whether he should participate in a study that involved “collecting DNA from the brightest minds in the fields of theoretical physics and mathematics.” I later learned that the codename for the study was “Project Einstein”, an initiative of entrepreneur Jonathan Rothberg with the goal of finding the genetic basis for “math genius”. After replying to my colleague I received an inquiry from another professor in the department, and then another and another… All were clearly flattered that they were selected for their “brightest mind”, and curious to understand the genetic secret of their brilliance.

I counseled my colleagues not to participate in this ill-advised genome-wide association study. The phenotype was ill-defined and in any case the study would be underpowered (only 400 “geniuses” were solicited), but I believe many of them sent in their samples. As far as I know their DNA now languishes in one of Jonathan Rothberg’s freezers. No result has ever emerged from “Project Einstein”, and I’d pretty much forgotten about the ego-driven inquiries I had received years ago. Then, last week, I remembered them when reading a series of blog posts and associated commentary on evolutionary biology by some of the most distinguished mathematicians in the world.

1. Sir Timothy Gowers is blogging about evolutionary biology?

It turns out that mathematicians such as Timothy Gowers and Terence Tao are hosting discussions about evolutionary biology (see On the recently removed paper from the New York Journal of Mathematics, Has an uncomfortable truth been suppressed, Additional thoughts on the Ted Hill paper) because some mathematician wrote a paper titled “An Evolutionary Theory for the Variability Hypothesis”, and an ensuing publication kerfuffle has the mathematics community up in arms. I’ll get to that in a moment, but first I want to focus on the scientific discourse in these elite math blogs. If you scroll to the bottom of the blog posts you’ll see hundreds of comments, many written by eminent mathematicians who are engaged in pseudoscientific speculation littered with sexist tropes. The number of inane comments is astonishing. For example, in a comment on Timothy Gowers’ blog, Gabriel Nivasch, a lecturer at Ariel University, writes:

“It’s also ironic that what causes so much controversy is not humans having descended from apes, which since Darwin people sort-of managed to swallow, but rather the relatively minor issue of differences between the sexes.”

This person’s understanding of the theory of evolution is where the Victorian public was at in England ca. 1871:

In mathematics, just a year later in 1872, Karl Weierstrass published what at the time was considered another monstrosity, one that threw the entire mathematics community into disarray. The result was just as counterintuitive for mathematics as Darwin’s theory of evolution was for biology. Weierstrass had constructed a function that is uniformly continuous on the real line, but not differentiable on any interval:

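$f(x) = \sum_{n=0}^{\infty} a^n \cos(b^n \pi x)$, where $0 < a < 1$, $b$ is a positive odd integer, and $ab > 1 + \frac{3}{2}\pi$.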
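If you want to see the monstrosity misbehave numerically, here is a minimal sketch that evaluates partial sums of a Weierstrass-type series, the sum over n of a^n cos(b^n π x), and computes difference quotients at x = 0 for shrinking step sizes. The parameter values a = 0.5 and b = 13, the truncation at 12 terms and the function names are my own illustrative choices, not anything from Weierstrass. A truncated sum is of course a perfectly smooth function, so this only hints at the behaviour of the limit: the difference quotients keep growing as the step shrinks, until the truncation catches up with you.

```python
import math

def weierstrass_partial(x, a=0.5, b=13, terms=12):
    """Partial sum of the series sum_{n>=0} a^n * cos(b^n * pi * x)."""
    return sum(a**n * math.cos(b**n * math.pi * x) for n in range(terms))

def difference_quotient(x0, h, **kwargs):
    """(f(x0 + h) - f(x0)) / h for the truncated series."""
    return (weierstrass_partial(x0 + h, **kwargs) - weierstrass_partial(x0, **kwargs)) / h

# Illustrative parameters: 0 < a < 1, b odd, and a*b = 6.5 > 1 + 3*pi/2.
quotients = [difference_quotient(0.0, 13.0 ** (-m)) for m in range(1, 6)]

for m, q in enumerate(quotients, start=1):
    print(f"h = 13^-{m}: difference quotient = {q:.1f}")
```

For an honest differentiable function those quotients would settle down to the derivative; here their magnitude blows up instead.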

Not only does this construction remain as valid today as it was back then, but lots of mathematics has been developed in its wake. What is certain is that if one doesn’t understand the first thing about Weierstrass’ construction, e.g. one doesn’t know what a derivative is, one won’t be able to contribute meaningfully to modern research in analysis. With that in mind consider the level of ignorance of someone who does not even understand the notion of common ancestor in evolutionary biology, and who presumes that biologists have been idle and have learned nothing during the last 150 years. Imagine the hubris of mathematicians spewing incoherent theories about sexual selection when they literally don’t know anything about human genetics or evolutionary biology, and haven’t read any of the relevant scientific literature about the subject they are rambling about. You don’t have to imagine. Just go and read the Tao and Gowers blogs and the hundreds of comments they have accrued over the past few days.

2. Hijacking a journal

To understand what is going on requires an introduction to Igor Rivin, a professor of mathematics at Temple University and, of relevance in this mathematics matter, an editor of the New York Journal of Mathematics (NYJM) [Update November 21, 2018: Igor Rivin is no longer an editor of NYJM]. Last year Rivin invited the author of a paper on the variability hypothesis to submit his work to NYJM. He solicited two reviews and published it in the journal. For a mathematics paper such a process is standard practice at NYJM, but in this case the facts point to Igor Rivin hijacking the editorial process to advance a sexist agenda. To wit:

- The paper in question, “An Evolutionary Theory for the Variability Hypothesis” is not a mathematics or biology paper but rather a sexist opinion piece. As such it was not suitable for publication in any mathematics or biology journal, let alone in the NYJM which is a venue for publication of pure mathematics.

- Editor Igor Rivin did not understand the topic and therefore had no business soliciting or handling review of the paper.

- The “reviewers” of the paper were not experts in the relevant mathematics or biology.